- This week, we laid out the goals for the project and discussed possible strategies to achieve those goals.

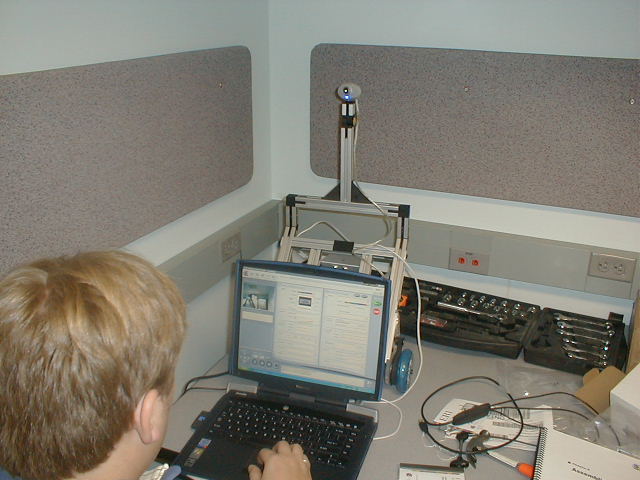

- Built a preliminary robot, as designed and explained in the Evolution kit (below).

- Discussed several possible modifications to the base design to accommodate our approach.

This week was spent making a few more design decisions and getting used to the included software, the Evolution Control Center. We also began learning Python, which is the only language for which an SDK is currently available. We still have a long way to go, but we are making progress. We've also installed and attempted to use the infrared sensors for obstacle avoidance. According to the manual, the sensors should give us a wide detection range in front of the robot.

We also decided to continue using the current design for the time being, since it has several benefits. It allows us to turn in place, which will be very useful for getting distance measurements from nearly the same location. It also allows us to easily mount the pair of cameras and the laser, using the parts included in the expansion kit. We will raise the second camera to the same height as the first, and mount the laser at a similar level, between the cameras.

Below is a movie of the robot in action. In this movie, LARS is being controlled remotely by a joystick in the Control Center. (download)

This week was spent working with the Python SDK trying to gain some experience programming LARS to perform autonomous tasks. While we were unable to figure out how to interact with the sensors, we were able to make LARS perform several different maneuvers.

The first program we wrote simply made the robot move forward a short distance. It is below:

```python
from ersp.task import navigation

# Move LARS forward a short distance from its current position.
navigation.MoveRelative(0, [25,0,10])
```

One of the more complicated maneuvers that we had LARS perform was to move around in a square. The controlling program is still simple, but we wrote it in several different ways. In one version, the motion was specified with respect to absolute position. Another used relative motion, where the next set of coordinates caused the turns. A third version only moves the robot forward, and states the turns explicitly. The third is shown below.

```python
from ersp.task import navigation
import math

theta = -90.0 * math.pi / 180.0  # -90 degrees, converted to radians
dist = 50                        # length of each side of the square

print("I'm now at 0,0")
print("gonna move now")

# Side 1: drive forward, then turn by theta.
navigation.MoveRelative(0.0, [dist,0,10])
print("i'm now at 50,0")
navigation.TurnRelative(theta)

# Side 2
navigation.MoveRelative(0.0, [dist,0,10])
print("i'm now at 50,-50")
navigation.TurnRelative(theta)

# Side 3
navigation.MoveRelative(0.0, [dist,0,10])
print("i'm now at 0,-50")
navigation.TurnRelative(theta)

# Side 4: return to the start, then turn back to the original heading.
navigation.MoveRelative(0.0, [dist,0,10])
print("i'm back at 0,0")
navigation.TurnRelative(theta)
```

Here is a movie of this code in action. (download)
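The coordinates printed by the square program can be checked with a little dead reckoning. The sketch below does not use the SDK at all. It assumes the robot starts at the origin facing the positive x direction and that angles are measured counterclockwise in radians (a convention inferred from the printed coordinates, not confirmed by the SDK documentation). The function name `trace_square` is our own.

```python
import math


def trace_square(dist=50.0, turn_deg=-90.0, sides=4):
    """Dead-reckon the robot's position after each forward move.

    Assumes a start at (0, 0) facing +x, with counterclockwise-positive
    angles. These conventions are assumptions, not SDK facts.
    """
    x, y, heading = 0.0, 0.0, 0.0
    turn = math.radians(turn_deg)
    corners = []
    for _ in range(sides):
        # Forward move of `dist` along the current heading.
        x += dist * math.cos(heading)
        y += dist * math.sin(heading)
        # Round away floating-point noise; adding 0.0 turns -0.0 into 0.0.
        corners.append((round(x, 6) + 0.0, round(y, 6) + 0.0))
        # In-place turn before the next side.
        heading += turn
    return corners


for corner in trace_square():
    print(corner)
```

Under those assumptions, the four corners match the positions announced by the print statements in the program.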

These weeks were dedicated to working out whether the issues with the SDK were occurring on our side or on Evolution's. For the LARS project to succeed, it is key that we are able to obtain images from the cameras quickly and efficiently. Retrieving up-to-date data from the infrared sensors would also be incredibly useful, since it would allow us to navigate effectively and react to obstacles in the environment in a controlled manner.

Unfortunately, it seems that neither of these two goals can be met using the SDK. After reading much of the ER1 community discussion forum, we came across two posts (here and here) which stated that IR readings and color readings are currently inaccessible (this may be resolved with the release of the C++ SDK in late March or April). It might still be possible to navigate without direct control over the IR sensors, using the built-in obstacle-avoidance system. However, at this point we've only succeeded in stopping the robot when an obstacle is detected, rather than maneuvering around it.

As for the camera issue, we have begun investigating alternate solutions, but we have no concrete plans as of yet. The best idea we've come up with so far revolves around interfacing directly with the cameras through driver calls in C++ or another language. This seems like a really large undertaking, and would probably delay the project significantly. However, if the delay is of a reasonable length, and still allows us to complete several of the original goals of LARS, we will probably go ahead with this method. Otherwise, we will need to reevaluate our project for the time being.

After more investigation into the possibilities of using Evolution's Python SDK, we've decided that it is simply unworkable as an environment for the LARS project. This brought us to a major crossroads in our development: find another approach, or give up the LARS project entirely for a more amenable one. At this point, we felt that giving up on the project was unacceptable, at least until all our options had been exhausted. Therefore, we decided to go forward with our investigation of a C++-based method.

Currently, Evolution has plans to release a more complete and feature-rich C++ SDK at the end of March or beginning of April. While we are hopeful that this release date is correct, and that we might be able to employ it in our project, we are progressing under the assumption that we will not be able to do so. Since we can get the robot to move more or less as we tell it using the Python SDK, we are simply going to use C++ and driver calls to deal with the cameras and proceed from there. If all else fails, we will be able to use the distance measurements we take from the cameras and laser in order to do crude obstacle avoidance (under several assumptions about the environment, such as only having obstacles at a particular height), and from there we should be able to complete many, if not all, of our original goals for LARS.

Over the last two weeks, we've gone forward in two directions concurrently. We have split our work into retrieving images from the cameras and processing the pictures once we have acquired them. Interfacing with the cameras has proven to be difficult, and we have not yet been able to do it successfully. Processing the images after they have been acquired, however, has progressed more smoothly.

Once an image has been acquired, we convert it to the Hue, Saturation and Value color-space. HSV is an alternate method of representing colors, in which Hue corresponds to the base color of a pixel, Saturation corresponds to the amount of the base color at that pixel, and Value corresponds to the intensity of the pixel. This provides for easier thresholding of the image, since it isolates each property of the individual pixels. This allows us to more easily determine the position of the laser pointer within the image.

The conversion between RGB and HSV is relatively straightforward.

In the formulas, 0.0 <= R, G, B <= 1.0:

- H = arccos( ((R - G) + (R - B)) / (2 * sqrt((R - G)^2 + ((R - B) * (G - B)))) )

- S = 1.0 - min(R,G,B) / V

- V = (R + G + B) / 3
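The three formulas above can be sketched in Python as follows. This is an illustration rather than the conversion program we actually ran. The function name `rgb_to_hsv` and the handling of the two degenerate cases (black pixels, where V is zero, and gray pixels, where the hue denominator is zero) are assumptions added here. Note also that arccos only returns angles between 0 and pi, so as written the formula gives pure green and pure blue the same hue. A common remedy is to use 2*pi - H whenever B > G; that step is offered below as the optional `full_circle` flag, which is likewise an addition and not part of the formulas above.

```python
import math


def rgb_to_hsv(r, g, b, full_circle=False):
    """Convert RGB components in [0, 1] to (h, s, v), with h in radians."""
    v = (r + g + b) / 3.0

    # Assumption: a black pixel (V == 0) is given zero saturation,
    # since the saturation formula would otherwise divide by zero.
    s = 0.0 if v == 0.0 else 1.0 - min(r, g, b) / v

    numerator = (r - g) + (r - b)
    radicand = (r - g) ** 2 + (r - b) * (g - b)
    denominator = 2.0 * math.sqrt(max(0.0, radicand))

    if denominator == 0.0:
        # Assumption: gray pixels (R == G == B) have no defined hue;
        # 0.0 is used as an arbitrary placeholder.
        h = 0.0
    else:
        # Clamp to [-1, 1] to guard against floating-point rounding.
        h = math.acos(max(-1.0, min(1.0, numerator / denominator)))
        if full_circle and b > g:
            # Optional extension: reflect so hue covers the full circle.
            h = 2.0 * math.pi - h
    return h, s, v


print(rgb_to_hsv(1.0, 0.0, 0.0))  # pure red
print(rgb_to_hsv(0.0, 1.0, 0.0))  # pure green
```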

As an example of how pictures are represented in this color space, we have taken a picture of a sunset, and run it through our conversion program. In the HSV composite pictures, the blue component of each pixel represents the hue, the green component represents saturation, and the red component represents the value. For the other pictures, the pixel is scaled according to hue, saturation or value, where white is max value and black is 0.
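The channel assignment described above can be sketched as a pair of small helpers. The names `hsv_composite_pixel` and `channel_gray` are hypothetical, and the linear scaling to 8-bit values, along with the choice of pi as the maximum hue (the largest value arccos can return), are assumptions rather than details taken from our conversion program.

```python
import math


def _to_byte(fraction):
    """Scale a fraction in [0, 1] to an integer in 0..255, clamping first."""
    return int(round(255 * max(0.0, min(1.0, fraction))))


def hsv_composite_pixel(h, s, v, h_max=math.pi):
    """Pack HSV into an (R, G, B) pixel: red=value, green=saturation, blue=hue."""
    return (_to_byte(v), _to_byte(s), _to_byte(h / h_max))


def channel_gray(x, x_max=1.0):
    """Render a single channel as gray: black is 0, white is the maximum."""
    level = _to_byte(x / x_max)
    return (level, level, level)


print(hsv_composite_pixel(math.pi, 1.0, 0.0))
print(channel_gray(1.0))
```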

Each picture links to the set of processed images.

In the final weeks of the project, the scope had to be narrowed once more. Acquiring the pictures proved to be more difficult than we had previously thought. Therefore, we decided that, in the interest of completing the interesting portion of the project (successfully navigating using only vision sensing), we would simply take the pictures manually at each step. This, of course, removes much of the autonomous feeling from the project. However, LARS does still determine the distance and decide what to do on its own. Also, if we later find a way to capture the images directly, it will be trivial to add that capability to LARS.

Because the C++ SDK release was available only for Red Hat Linux 7.3, which uses an older kernel than the versions readily available, we decided that it would be better to keep using the few features of the Python SDK that we had used successfully early in the project. We therefore continued to run our laptop under Windows XP and did not have to port any of the image processing software that we had already written. We wrote a set of C++ wrapper functions for the Python SDK calls, and were thus able to run everything from a Visual C++ application.

Another difficulty that we ran into was in the actual image processing. We attempted to use two cameras with LARS: the iRez KritterCam, which came bundled with the ER1, and a Creative WebCam Pro, which we purchased separately. Each image that we captured was first converted to the HSV system. Then, a set of thresholds was used to search the image and determine the location of the laser pointer. Using this method, we could adjust the thresholds so that we were able to accurately locate the laser pointer in the image taken by the WebCam Pro. Unfortunately, the iRez camera exhibited a relatively heavy bias towards red, making it quite difficult to determine the laser position without returning several false positives.
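A minimal sketch of the threshold search is shown below, assuming the image is already a list of rows of (h, s, v) tuples. The function name `find_laser`, the three threshold parameters, and the choice to report the centroid of all matching pixels are illustrative assumptions, as are the threshold values in the example. The real thresholds had to be tuned per camera.

```python
def find_laser(hsv_image, h_max, s_min, v_min):
    """Return the (column, row) centroid of pixels passing the thresholds.

    A pixel matches when its hue is at most h_max and its saturation and
    value are at least s_min and v_min. Returns None if nothing matches.
    """
    count = 0
    col_sum = 0
    row_sum = 0
    for row, line in enumerate(hsv_image):
        for col, (h, s, v) in enumerate(line):
            if h <= h_max and s >= s_min and v >= v_min:
                count += 1
                col_sum += col
                row_sum += row
    if count == 0:
        return None
    return (col_sum / float(count), row_sum / float(count))


# Tiny synthetic image: dull background with a two-pixel bright spot.
background = (2.0, 0.1, 0.2)
spot = (0.05, 0.9, 0.8)
image = [
    [background, background, background, background],
    [background, background, spot, spot],
    [background, background, background, background],
]
print(find_laser(image, h_max=0.2, s_min=0.5, v_min=0.5))
```

With a camera biased towards red, many background pixels would also pass a hue test like this one, which is the kind of false positive described above.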

After spending quite a bit of time attempting to adjust the thresholds for the iRez camera, we decided to give up on that camera and use only the Creative WebCam Pro. If time had permitted, we would have purchased a second Creative camera and continued with the binocular vision. However, it did not, and so we went forward with only the one camera.

To determine our distance from a given object, we used our image processing software to find the center of the laser spot. We then populated a lookup table pairing the spot's offset in pixels from the horizontal center of the image with the corresponding distance. This produced better results than our attempts at modeling the relationship directly. Using this lookup table (with intermediate values interpolated between the two bracketing entries), we were able to determine distances experimentally to within three centimeters, consistently.
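The lookup step can be sketched as below. The calibration pairs are made-up placeholder numbers, not our measured table, and the names `CALIBRATION` and `distance_from_offset` are hypothetical. The sketch also assumes straight-line (linear) interpolation between neighboring entries and clamps offsets that fall outside the table, neither of which is spelled out above.

```python
from bisect import bisect_left

# HYPOTHETICAL calibration data: (pixel offset from image center, distance in cm).
# Real entries would come from measuring known distances with the robot.
CALIBRATION = [(10, 200.0), (20, 120.0), (40, 70.0), (80, 40.0)]


def distance_from_offset(offset_px, table=CALIBRATION):
    """Estimate distance by interpolating between the two bracketing entries.

    `table` must be sorted by pixel offset. Offsets outside the table are
    clamped to the nearest end (an assumption made for this sketch).
    """
    offsets = [offset for offset, _ in table]
    if offset_px <= offsets[0]:
        return table[0][1]
    if offset_px >= offsets[-1]:
        return table[-1][1]
    # Index of the first entry whose offset is >= offset_px.
    i = bisect_left(offsets, offset_px)
    (x0, d0), (x1, d1) = table[i - 1], table[i]
    weight = (offset_px - x0) / float(x1 - x0)
    return d0 + weight * (d1 - d0)


print(distance_from_offset(30))
```

A table like this sidesteps the need for an accurate geometric model of the camera and laser, at the cost of a calibration pass for each physical setup.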

Based on these distances, and a simple controlling algorithm, we were able to successfully wall-follow and explore a small area. Thus, we were able to successfully gauge distances and avoid obstacles using only vision processing.