Nomad 200

Brie Finger

Jessica Fisher

February 16, 2003

Avoidance Software

Introduction

One of the most fundamental problems in robotics is developing robots

that can navigate through the world without damaging themselves or

others. There have been many different approaches to accomplishing

this task dating from the early days of robotics (such as the Stanford

Cart experiments and "Shakey" the robot). In order to solve

higher-level problems, test robots must first be taught to safely

move through their world.

The goal of this project is to program the Nomad 200 to travel through

the Libra Complex. The Nomad 200 has arrays of sonar and infrared

sensors mounted on a turret, which can be used to navigate around

obstacles. The Nomad has three synchronized wheels, which are

controlled by a single rotational/translational velocity parameter or

an angle/distance parameter. The Nomad is limited to traveling on

relatively flat ground, unlike such robots as the PolyBots[1], which are designed to mimic natural creatures

and travel over diverse terrains.

In this portion of the project, we have programmed the Nomad to

wander through a map (following corridors) without running into

obstacles. Eventually, we

would like the robot to be able to get from its current location to an

arbitrary location, given a map of its surrounding area. We are using

a simulation

program to test our code without risking damage to the robot. The

code can then be downloaded onto the robot to test in real-world

situations.

Background

The first approach to robot navigation/architecture was the

Sense-Plan-Act (SPA)

approach[2]. In this method, the robot develops a representation of the

world around it using sensors ("sense"), makes a plan of action based

on this representation

("plan"), and carries out that action ("act"). The main problem with this

approach is that during the time when the robot is planning, the world

may change significantly (for example, if there are moving elements in it).

However, this approach was used somewhat successfully by researchers

at Stanford, although it was most successful in very simple human-made

"worlds."

Rodney Brooks took a new approach to robot architecture with the

"subsumption" method[3]. Brooks advocated the

development of insect-like robots, which reacted in direct response to

sensor input (eliminating the "plan" stage). Such robots would have a

default "wander" behavior that was "subsumed" by behaviors such as

"run away" if the robot got too close to an obstacle. The problem

with this method is that the more things that the robot is capable of,

the more complicated the subsumption design gets, until eventually a

limit is reached. The can-collecting robot developed in 1988[4]

is considered to be the extent of what can be accomplished with this

architecture.

More recently, Erann Gat described a three-layer architecture, which

includes elements of both SPA and subsumption[5]. In this approach, the robot has a "Controller,"

which implements low-level behaviors, a "Sequencer," which determines

the order in which to carry out those behaviors, and a "Deliberator,"

which makes high-level decisions based on current and past states.

The first task researchers developed for each of these architectures

was simply to have the robot navigate the world around it.

Higher-level tasks (such as collecting Coke cans) all depend on

the robot's ability to avoid obstacles. Our project will provide a

base from which higher-level functionality can be implemented on the

Nomad 200.

Approach

Our method is

modeled on three-layer architecture. The Controller in our case

consists of low-level wall avoiding and

corridor-centering behaviors. The Sequencer determines which of these

behaviors should be followed, based on sensor input. As yet, we have

no Deliberator, but we intend to continue development by adding

high-level maze navigation, which will

become part of the Deliberator.
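
The split between Sequencer and Controller described above can be sketched as follows. This is a minimal illustration only, not the project's actual code: the function name `sequencer`, the `Command` tuple, and the rule "avoid walls whenever any front infrared reading is below the threshold" are all assumptions made for the example.

```python
from typing import Callable, Sequence, Tuple

# (forward velocity, rotational velocity); units and signs are assumptions.
Command = Tuple[float, float]


def sequencer(
    front_infrared: Sequence[float],
    threshold: float,
    avoid_walls: Callable[[], Command],
    center_in_corridor: Callable[[], Command],
) -> Command:
    """Choose which Controller behavior runs during this control cycle."""
    too_close = any(reading < threshold for reading in front_infrared)
    # Wall avoidance takes priority; otherwise fall back to corridor centering.
    return avoid_walls() if too_close else center_in_corridor()
```

A Deliberator, once written, would sit above this function and could change which behaviors the Sequencer is allowed to choose from.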

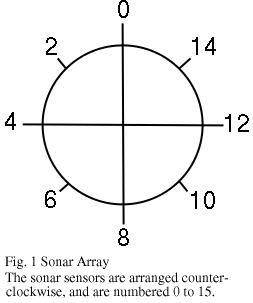

Our first

goal was to program the Nomad to move around and avoid obstacles

(low-level wall-avoidance). The

approach we took was to test the short-range infrared sensors

for nearby objects, and have the robot turn away from them. We

only examine the front half of the infrared sensor array

because we have the turret and wheels coordinated (i.e. the robot is

always moving "forward," or away from obstacles detected by the rear

array). We set a "threshold" variable to represent the closest the

robot should get to an object without taking evasive action. When the

robot has crossed this "threshold," it will slow and turn away from

the object. The speed of rotation is inversely proportional to the

distance from the object, and the forward velocity is directly

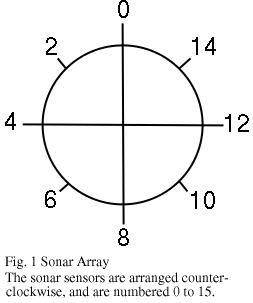

proportional to the distance from the object. If infrared sensor 0 is

triggered, the robot will

slow dramatically as it approaches the obstacle and will turn

counterclockwise. If infrared sensors 1 through 3 are triggered, the robot

will slow somewhat and turn counterclockwise. Finally, if sonars 13

through 15 are triggered, the robot will slow somewhat and turn

clockwise.

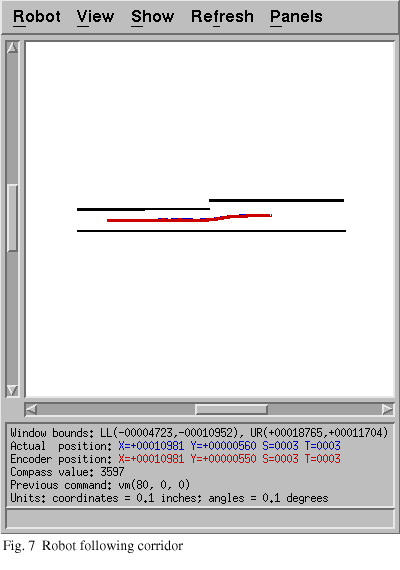
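
As a rough sketch of the wall-avoiding behavior described above, the function below slows the robot in proportion to the distance and turns it at a rate inversely proportional to the distance. The turn directions follow the text; the default threshold, cruise speed, gain, and the two slow-down factors are hypothetical values chosen only to make the example run, and positive rotation is assumed to mean counterclockwise.

```python
from typing import Sequence, Tuple

FRONT_SENSORS = (0, 1, 2, 3, 13, 14, 15)  # infrared sensors named in the text


def avoid_walls(
    infrared: Sequence[float],
    threshold: float = 20.0,  # hypothetical distance units
    cruise: float = 10.0,     # hypothetical default forward velocity
    k_turn: float = 100.0,    # hypothetical rotation gain
) -> Tuple[float, float]:
    """Return (forward velocity, rotational velocity); positive = counterclockwise."""
    nearest = min(FRONT_SENSORS, key=lambda i: infrared[i])
    distance = infrared[nearest]
    if distance >= threshold:
        return cruise, 0.0  # nothing inside the threshold: keep wandering
    # Sensor 0 slows the robot dramatically, the others only somewhat.
    slow_factor = 0.25 if nearest == 0 else 0.5
    forward = cruise * slow_factor * distance / threshold  # proportional to distance
    turn = k_turn / max(distance, 1.0)  # inversely proportional to distance
    direction = -1.0 if nearest >= 13 else 1.0  # sensors 13-15: clockwise
    return forward, direction * turn
```

For example, with every reading at 30 the robot keeps its cruise command, while a reading of 10 on sensor 0 yields a slow forward speed and a counterclockwise turn.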

On top of this low-level wall avoiding, we have added a layer of control to

help center the robot between walls (corridor-following). This is

done by the use of proportional control

based on the difference between the distance to the walls on either

side and the angle at which the robot is moving relative to these

walls. The first type of error ("A") we examine is the difference between

the distance to the left and right walls, as measured from sonar

sensors 4 and 12. The second error ("B") we examine is the difference

between the sonar readings for sensors 13 and 11, which will let us

know the robot's approximate angle relative to the right wall. Based

on the relative sizes of these errors, we set the robot's rotational

velocity in order to direct it toward the center of the corridor.
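
The two errors can be written down directly from the sonar readings. The sketch below is illustrative: the sign conventions (left minus right for A, sensor 13 minus sensor 11 for B) are assumptions, since the text only says that each error is a difference.

```python
from typing import Sequence, Tuple


def corridor_errors(sonar: Sequence[float]) -> Tuple[float, float]:
    """Return (error A, error B) from a 16-element list of sonar distances."""
    # Error A: left-wall distance minus right-wall distance.
    # Positive (under this assumed sign) means the robot is nearer the right wall.
    error_a = sonar[4] - sonar[12]
    # Error B: difference between the two sonars flanking sensor 12.
    # Zero means the robot is roughly parallel to the right wall.
    error_b = sonar[13] - sonar[11]
    return error_a, error_b
```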

In our implementation, we have attempted to prevent the robot from

angling itself too sharply to get to the center of the corridor,

because sharp angling will lead the robot to overshoot the center and

oscillate back and forth.

If the robot is closer to the right wall than the left wall, we have

several subcases to examine.

- If the robot is much closer to the right wall than the left, but

is not angled very sharply away from the wall, we direct the robot to

turn toward the center of the hallway (i.e. counterclockwise) at a

rotational velocity proportional to error A.

- If the robot is much closer to the right wall than the left, and is

already angled fairly sharply away from the wall, we simply allow the

robot to continue on its course, rather than having it turn more

dramatically.

- If the robot is close to being directly between the two walls, we

have three more subcases.

- If the robot is angled too sharply toward the left wall, we set

its rotational velocity clockwise (proportional to error B) to

compensate and prevent it from overshooting too far.

- If the robot is at a small angle, we allow it to continue on its

course.

- If the robot is not heading toward the center of the hallway, we

set its rotational velocity to turn back toward the center,

proportional to error A.

The controls are analogous in the case that the robot is closer to the

left wall than the right wall. If the robot is directly centered, we

want to allow it to continue forward without turning. In order to aid

the corridor-following behavior, when the robot is initialized, we

direct it to rotate until it is parallel to the nearest obstacle.
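
The case analysis above can be condensed into one function by mirroring the left-wall cases onto the right-wall ones. This is a sketch under stated assumptions, not the authors' code: error A is taken as left minus right, error B as sonar 13 minus sonar 11, positive rotation as counterclockwise, and the thresholds and gains (`near_center`, `sharp_angle`, `k_a`, `k_b`) are hypothetical.

```python
def centering_rotation(
    error_a: float,
    error_b: float,
    near_center: float = 5.0,  # hypothetical "close to centered" band
    sharp_angle: float = 3.0,  # hypothetical "angled sharply" limit on error B
    k_a: float = 0.5,          # hypothetical gain on error A
    k_b: float = 2.0,          # hypothetical gain on error B
) -> float:
    """Return a rotational velocity; positive means counterclockwise."""
    if error_a == 0:
        return 0.0  # directly centered: continue forward without turning
    side = 1.0 if error_a > 0 else -1.0  # +1: nearer right wall, -1: nearer left
    offset = abs(error_a)
    heading = side * error_b  # > 0: already angled toward the corridor center
    if offset > near_center:
        if heading >= sharp_angle:
            return 0.0  # already angled sharply toward the center: hold course
        return side * k_a * offset  # turn toward the center, proportional to A
    if heading > sharp_angle:
        return -side * k_b * abs(error_b)  # about to overshoot: steer back
    if heading >= 0:
        return 0.0  # small angle near the center: hold course
    return side * k_a * offset  # drifting away: turn back toward the center
```

Holding course in the two "already angled" cases is what keeps the robot from overshooting the center and oscillating.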

Progress

We have programmed the robot to avoid obstacles while

wandering through its world. Some parameters used (such as the

threshold, default velocities, or proportional constants) produce drastically

different results when changed even slightly.

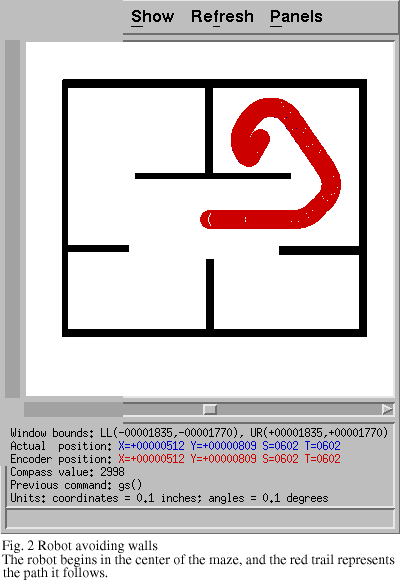

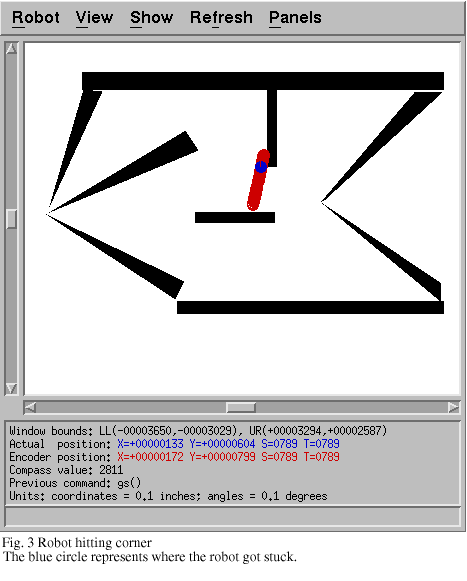

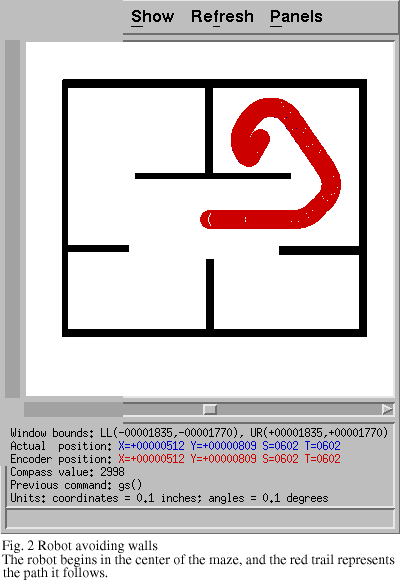

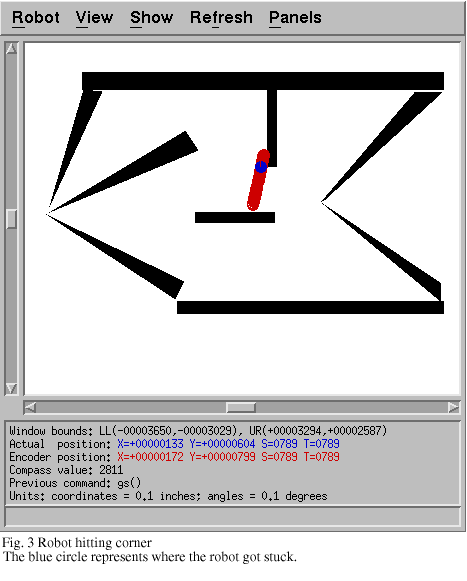

After the first week of work, the robot was able to avoid most

obstacles, but had difficulty avoiding

the corners of rectangular objects. At this point in our work, we had

only programmed the low-level wall-avoiding behavior. Figure 1 shows the robot

successfully avoiding walls, while Figure 2 shows the robot getting

stuck on a corner. At first it seemed that the infrared sensors had

difficulty detecting pointed objects, but we later discovered that

there had been a mistake in our reading of the sensors, and in fact we

were never reading sensor 15. This was caused by the use of an

incorrect offset value into the robot's State array, which resulted in

reading all of the infrared sensors off by 1. This explains the robot hitting

corners, because it always hit on the corner where sensor 15 would

have detected the obstacle.
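
The effect of a wrong offset can be reproduced with a toy state array. The layout used here (one leading status slot followed by 16 infrared readings) and the names `IR_OFFSET` and `read_infrared` are hypothetical stand-ins chosen for illustration; they are not taken from the robot's actual interface.

```python
from typing import List, Sequence

IR_COUNT = 16
IR_OFFSET = 1  # assumed index of infrared sensor 0 in the toy state array


def read_infrared(state: Sequence[int], offset: int = IR_OFFSET) -> List[int]:
    """Return the 16 infrared readings starting at the given offset."""
    return [state[offset + i] for i in range(IR_COUNT)]


# Toy state: slot 0 is a status word (99), then sensor i holds the value i.
state = [99] + list(range(IR_COUNT))
correct = read_infrared(state)            # sensor i reads i
shifted = read_infrared(state, offset=0)  # off by one: sensor 15 is never read
```

With the shifted offset, every reading is attributed to the wrong sensor and the value from sensor 15 never appears, which matches the symptom of the robot clipping corners on that side.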

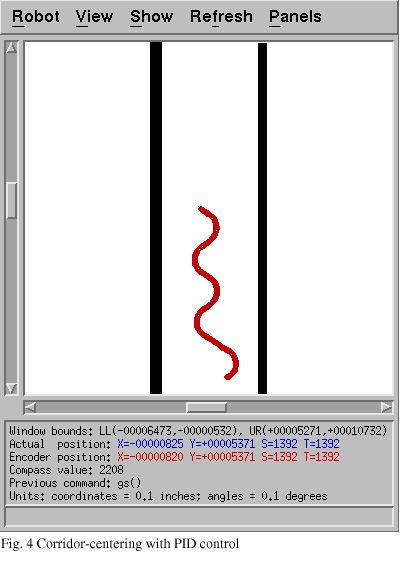

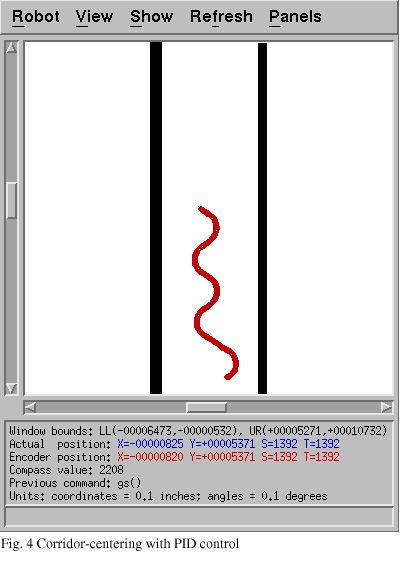

After fixing this during week two, the robot stopped running into corners.

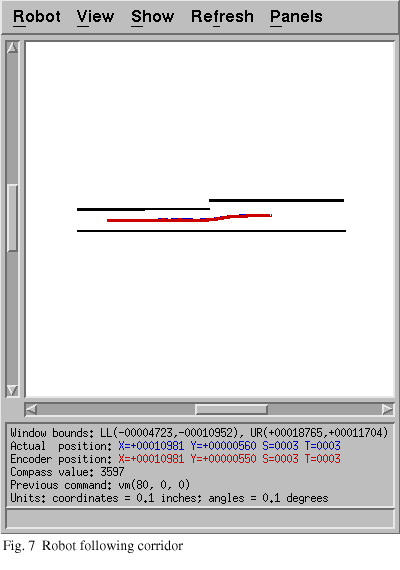

We then added support for the long-range sonar sensors. The input from the

sonar sensors is used to assist the robot in staying in the middle of hallways

(or in the most open space available). We experimented with PID

control using the position-relative commands available for the robot

rather than the velocity commands. During this stage, the robot (as shown

in Fig. 4) continuously oscillated side to side down the hallway. It

later became evident that this problem was likely due to the

implementation of the position-relative commands. Rather than

directing the robot to turn 5 degrees to the left, each time the

command was sent the robot would attempt to turn 5 more degrees to the

left from its current position. We later abandoned this approach for

a method based on setting the robot's rotation velocity.
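
The accumulation problem with position-relative commands can be shown with a simplified model. It is a sketch only: the functions below are hypothetical and do not call any robot command. They compare what happens when "turn 5 degrees" is re-sent every cycle as a relative command with what the controller intended, a fixed 5-degree heading.

```python
def heading_with_relative(turn_deg: float, cycles: int) -> float:
    """Heading after re-sending the same position-relative turn each cycle."""
    heading = 0.0
    for _ in range(cycles):
        heading += turn_deg  # each command starts from the current heading
    return heading


def heading_with_absolute(target_deg: float, cycles: int) -> float:
    """Heading if each command meant 'face target_deg', as was intended."""
    heading = 0.0
    for _ in range(cycles):
        heading = target_deg  # repeating the command changes nothing further
    return heading
```

After four cycles the relative version has turned 20 degrees instead of 5, which is consistent with the side-to-side oscillation described above.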

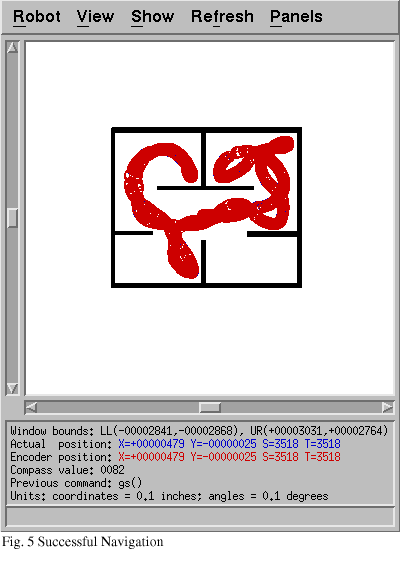

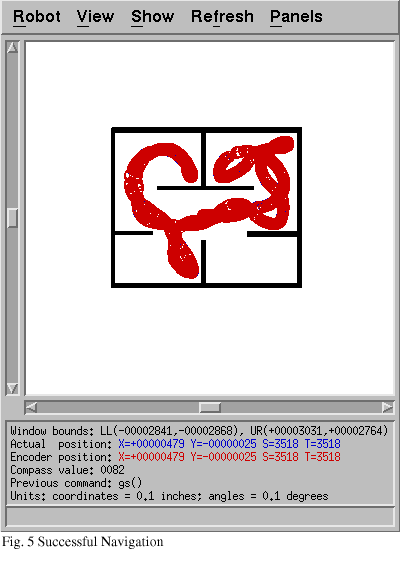

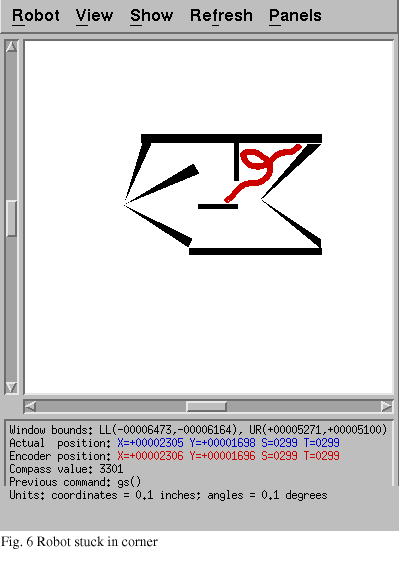

However, using the position-relative approach, the robot was

able to explore a room without hitting obstacles (Fig. 5), although a

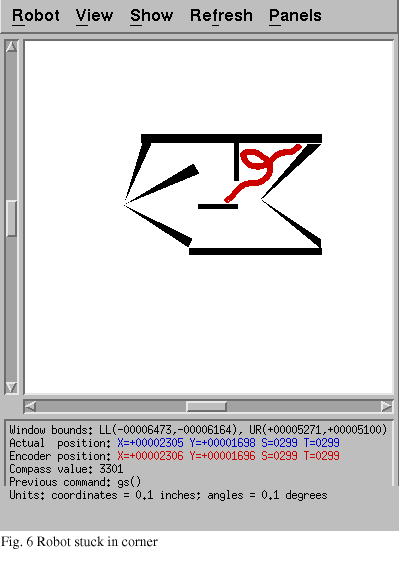

problem arose when the robot got into a corner (Fig. 6). The primary

problem is in the short-range wall-avoidance behavior. The robot senses the

wall on its right, and begins to turn counterclockwise; it then senses the

other wall on its left, and attempts to turn clockwise. As a result, the robot

becomes "stuck" in the corner, attempting to turn first one way and then

the other but never getting out. The problem is exacerbated by the

corridor-centering technique, because the angled walls in the map led the

robot to attempt to center itself between the angled walls, heading straight

for the corner. When it got to the corner, the short-range behavior took over,

and the robot was unable to get out. This problem is still present in the final

version of our program, although it is not always repeatable, and

it appears to only be a problem when navigating between sharply angled walls.

To solve this problem, we could change the low-level behavior so that if

the robot gets too close to a wall, it gets passed a negative velocity and

simply backs away from the wall. This then introduces the problem of how to

make sure the robot does not start for the corner again as soon as it

is far enough away. However, since most worlds do not have such

angled walls, we are temporarily overlooking this issue.

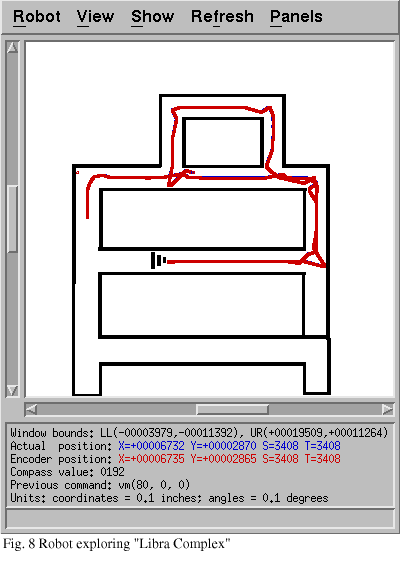

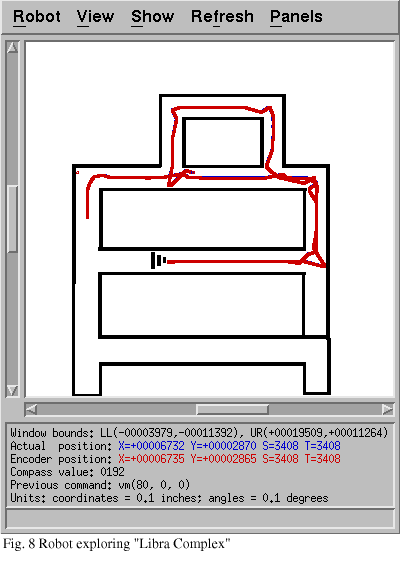

In our third week of work, we developed a new version of the

corridor-centering behavior, as described in the "Approach" section.

With the new settings, only proportional control is used rather than

PID control. With the latest settings, the robot is able to

successfully explore a map without hitting anything, and maintains its

position in the center of the hallway as long as possible. The

major problem the current program has is related to turning corners.

The lack of a decision module for corner-turning results in the robot

getting "confused" about which way to go in an intersection. With the

current implementation, depending on timing, the robot may either turn

a corner or continue straight if that is an option. There may also be

a problem in a situation where the robot is in a "room" rather than a

"hallway." In this case, the robot may center itself in the room, but

likely would not be able to find a doorway. This may be solved by

implementing a wall-following module in addition to the

corridor-following function. Wall-following would also produce a

simple way to avoid the corner-turning problem. However,

wall-following does not seem to be the most intelligent approach to

navigation in a given map, which is our future goal. It seems that it

might be more useful to follow a corridor and make a decision at the

corner rather than simply following the walls until getting to the

desired location (which would take much longer).

Perspective

Overall, the Nomad is currently able to successfully navigate basic

maps, maintaining a good distance from most obstacles and never

actually hitting anything. The robot still has some problems with

turning corners efficiently and with navigating rooms with sharply

angled walls (although this problem does not seem to be reliably

reproducible). One of the key factors in assuring that the robot does

not ever hit obstacles is the use of a "buffer" zone, such that when

the robot gets within a certain distance of an obstacle, it will

completely stop moving and turn to a safe direction. With respect to

the corridor-centering behavior, a key element is detecting how

sharply the robot is angled to minimize the amount the robot

overshoots its target.

Adding more behaviors would improve the system. First among these

additions would be the introduction of a corner-turning behavior and a

deliberative layer to determine which direction to turn in an

intersection. Also, the addition of a secondary wall-following system

would be ideal for the situation in which the robot is in a large open

space. In this case, the wall-following behavior would be "turned

on," and the robot would continue in one direction until encountering

a wall to follow. This would allow the robot to reliably find an exit

from a room.

In conclusion, the current system provides a stable foundation for

expansion toward navigating to a specific point on a given map. With

some minor improvements and additions, this expansion should be within

our grasp.

References

1. Yim, Mark et al. "Walk on the Wild Side: The reconfigurable

PolyBot robotic system." IEEE Robotics and Automation Magazine: 2002.

2. Moravec, H. P. "The Stanford Cart and the CMU Rover."

Proceedings of the IEEE: 1982.

3. Brooks, Rodney. "Achieving Artificial

Intelligence through Building Robots." Massachusetts Institute of

Technology: 1986.

4. Brooks, R. A., J. H. Connell, and P. Ning. "Herbert: a

Second Generation Mobile Robot." MIT: 1988.

5. Gat, Erann. "On Three-Layer Architectures."

Jet Propulsion Laboratory, California Institute of Technology: 1998.

Acknowledgements

We would like to thank Prof. Zach Dodds for his assistance.
