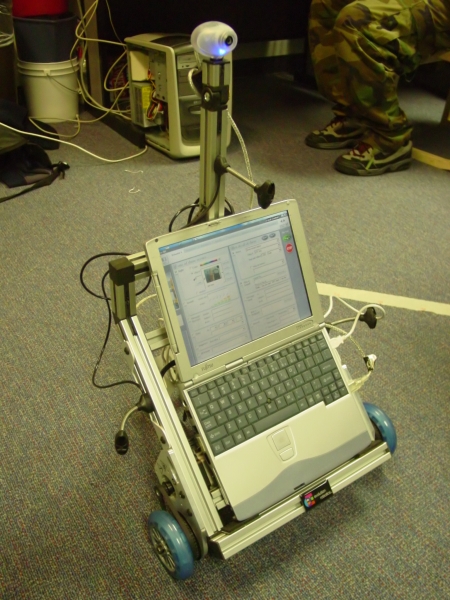

So far, we've successfully programmed a basic wandering and obstacle avoidance behavior for the ER1. We then implemented Monte Carlo localization using a pre-determined (and carefully measured) map. We've used two different APIs and are migrating to a third for this project.

For the final project, we propose to extend localization to incorporate additional sensor data not normally leveraged by the ER1 platform. Namely, we want to use 802.11 signal readings to augment IR sensor data. By using both sets of data, we expect to obtain higher accuracy and precision for localization within the map.
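
As a rough illustration of how the two data sources might be combined, the sketch below weights a single MCL particle by multiplying an IR likelihood with an 802.11 likelihood. It is not our actual implementation: the function names, the Gaussian sensor model, the units (centimeters for IR, dBm for signal strength), and the sigma values are all assumptions made for the example.

```python
import math


def gaussian_likelihood(measured, expected, sigma):
    """Unnormalized Gaussian likelihood of a measurement given its expected value."""
    error = measured - expected
    return math.exp(-0.5 * (error / sigma) ** 2)


def particle_weight(ir_measured, ir_expected, rssi_measured, rssi_expected,
                    ir_sigma=10.0, rssi_sigma=6.0):
    """Combine IR range and 802.11 signal likelihoods for one particle.

    Assumes the sensors are conditionally independent given the pose, so
    their likelihoods multiply. The sigma defaults are illustrative guesses.
    """
    weight = 1.0
    for measured, expected in zip(ir_measured, ir_expected):
        weight *= gaussian_likelihood(measured, expected, ir_sigma)
    weight *= gaussian_likelihood(rssi_measured, rssi_expected, rssi_sigma)
    return weight


def normalize(weights):
    """Scale weights to sum to 1; fall back to uniform if all are zero."""
    total = sum(weights)
    if total == 0.0:
        return [1.0 / len(weights)] * len(weights)
    return [w / total for w in weights]
```

Under these assumptions, a particle whose predicted IR readings match the measurement but whose predicted signal strength does not is penalized, which is the effect we hope the wireless data will have on ambiguous poses.
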

Our project relates to the MIT tour guide robot in that it must navigate a specified area while avoiding obstacles.

Our project relates to many mapping projects, including those involving Monte Carlo localization (described in "Monte Carlo Localization for Mobile Robots" by Dellaert, Fox, Burgard, and Thrun). Their technique allows us to globally localize a robot using relatively little memory. While we chose MCL, an alternative approach to localization is based on Markov models and is discussed in "Probabilistic Robot Navigation in Partially Observable Environments" by Simmons and Koenig. Yet another method is detailed in "DERVISH: An Office-Navigating Robot," but it requires quantization of the environment, which would hinder our efforts to generate good 802.11 map data.

We chose the new API for controlling the robot because we wanted to control it using the techniques of subsumption. This method, discussed in "Achieving Artificial Intelligence Through Building Robots" by Brooks, seems more desirable than the primitive "issue command, wait for completion" cycle forced upon us by the old API.

The Coastal Navigation project (as described in "Coastal Navigation - Mobile Robot Navigation with Uncertainty in Dynamic Environments" by Roy, Burgard, Fox, and Thrun) has similar goals but differs in several ways in how it achieves them. For example, their algorithm attempted to handle dynamic obstacles by estimating the probability that a sensor reading is corrupted. This is a difficulty we have chosen not to pursue. When we attempt to build and extend maps with the robot, we will probably use evidence grids created from a measured sensor model (see "Robot Evidence Grids" by Martin and Moravec). The potential field method, described in "Experiments in Automatic Flock Control" by Vaughan, Sumpter, Frost, and Cameron, could be used to help our robot choose paths, but our initial obstacle avoidance simply detected obstacles and turned away from them.

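
To make the contrast with the "issue command, wait for completion" cycle concrete, here is a minimal sketch of priority-based behavior arbitration in the spirit of subsumption. It is a simplification: real subsumption layers run concurrently and suppress or inhibit each other's signals, whereas this example just polls behaviors in priority order. The behavior names, the command strings, the 30 cm threshold, and the left/center/right ordering of the three IR readings are assumptions made for illustration, not part of any ER1 API.

```python
def avoid_obstacle(sensors):
    """Highest priority: turn away when any IR reading is too close."""
    left, _center, right = sensors["ir_cm"]
    if min(sensors["ir_cm"]) < 30:  # threshold is an illustrative guess
        # Turn toward the side with more free space.
        return "turn_right" if left < right else "turn_left"
    return None  # nothing to avoid, defer to lower layers


def wander(sensors):
    """Lowest priority: keep driving forward when nothing else applies."""
    return "forward"


# Behaviors listed from highest to lowest priority.
BEHAVIORS = [avoid_obstacle, wander]


def arbitrate(sensors, behaviors=BEHAVIORS):
    """Return the command of the first behavior that wants control."""
    for behavior in behaviors:
        command = behavior(sensors)
        if command is not None:
            return command
    return "stop"
```

Calling `arbitrate` on every control tick means a higher layer takes over the moment its trigger condition holds, so new behaviors can be added to the list without rewriting the ones below them.
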
Since our robot has no limbs and all control is limited to wheel rotation, we have no need for the techniques described in "Exemplar-Based Primitives for Humanoid Movement Classification and Control" by Drumwright, Jenkins, and Mataric.

The MIT Artificial Intelligence Laboratory designed and built Polly, an autonomous robot which, given a map of the laboratory, gave tours to people who asked by waving their feet. Polly was one of the first robots to use "active, purposeful, and task-based vision." In other words, Polly used vision only for very specific tasks and didn't try to fully process images. "Specialization allowed the robot to compute only the information it needed." By attending only to task-specific information, Polly was able to perform a complex behavior using a fairly straightforward rule base.

Our aim with the ER1 is similar. As Rodney Brooks pointed out, "the idea is to decompose the desired intelligent behavior of a system into a collection of simpler behaviors." We will break down a "mapping" task into several components, including basic object detection and avoidance, and then move up to wall detection and mapping. On top of that, we can layer any number of behaviors, including taking pictures of landmarks, navigating to a goal, or notifying us via email if someone is walking around the Libra complex late at night.

We need to make the following modifications to the Java API in order to complete this project:

Here are a few screenshots of our program in action. There are several windows open. The top left is a control panel, which also displays current IR sensor readings and odometry numbers. The top center panel is a graphical representation of IR sensor readings. We added the orange cone here to provide a graphical representation of the wireless signal strength. The bottom panel shows the robot, map, and MCL particles. Walls are blue lines. The grey pentagon represents the ER1 and the three yellow lines represent the IR sensors. The red, orange and green dots on the map represent poor, medium, and excellent wireless signal strength respectively. Those are the readings which particles use for MCL. Particles are in light grey and pink.

The first screenshot shows the robot in an area of low signal strength, as indicated by the orange cone. The second shows the robot in an area of high signal strength. Note the pink particles, which represent the top 25 percent of the MCL particles.
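
The display logic described above could be sketched roughly as follows. This is a hypothetical illustration rather than our program's code: the particle representation (a dict with a `weight` key), the percentage scale for signal strength, and the color thresholds are all assumed for the example.

```python
def top_quartile(particles):
    """Return the highest-weighted 25 percent of particles (at least one if any exist)."""
    ranked = sorted(particles, key=lambda p: p["weight"], reverse=True)
    count = max(1, len(ranked) // 4)
    return ranked[:count]


def signal_color(strength_percent, poor_below=40, excellent_from=70):
    """Map a signal strength to a map-dot color; thresholds are illustrative guesses."""
    if strength_percent < poor_below:
        return "red"      # poor
    if strength_percent < excellent_from:
        return "orange"   # medium
    return "green"        # excellent
```

Particles returned by `top_quartile` would be drawn in pink and the rest in light grey.
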

A movie where the robot locates and follows the retail box it came in.