In the balance of the semester, we extended the MapTool code to implement FastSLAM. While we were able to write a significant amount of the code,

we were unable to successfully debug it and get it to work. However, we made a number of improvements to MapTool and quite functional support

code for FastSLAM.

The FastSLAM algorithm as described by the original paper has three steps. In the first step, each particle's robot pose hypthesis is updated

based on the robot's control sequence and a motion model with noise. The second step uses the information from the sighting of a landmark

a measurement model, each particle's current hypothesis for the spotted landmark, and each particle's robot hypothesis to perform an extended

Kalman filter update to the Gaussian distributions describing each landmark hypothesis. The third step assigns probabilities to each landmark and

resamples, hopefully drawing more heavlily from the landmarks which seem to fit the observed information. We managed to implement a working

version of step one and a faulty version of step two.

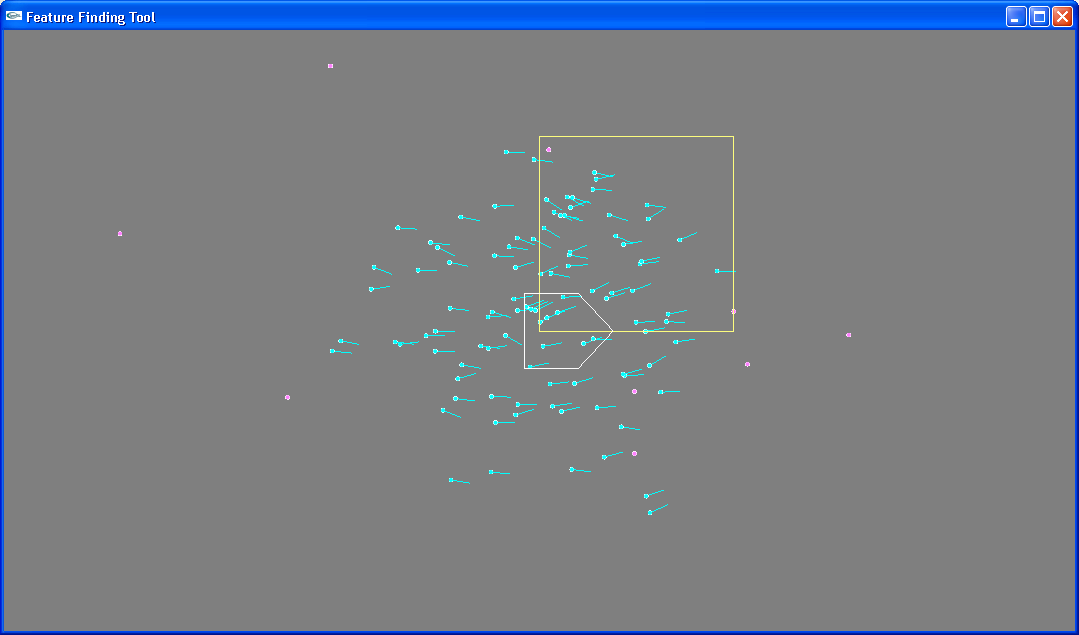

Landmark Display

We added an array of landmark hypotheses for each particle. The hypotheses begin randomly distributed over a set rectangular area.

Their display can be toggled on and off, and they appear as pink dots on the map screen.

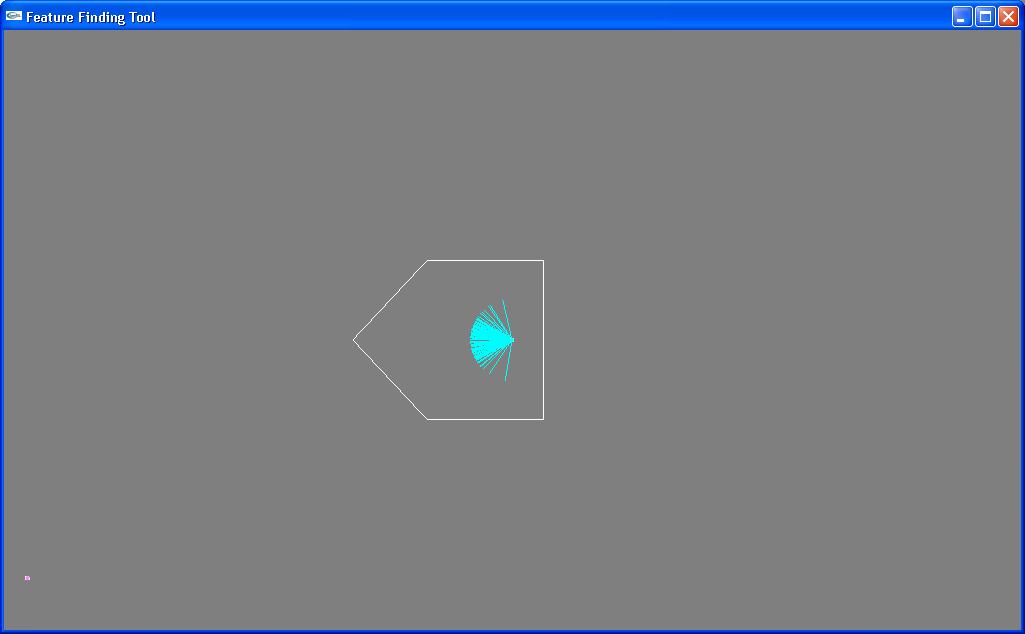

Pose Hypotheses

We added code to give each particle a robot pose a hypothesis of the robot's pose. These estimates are show in cyan on the map,

and have both an (x,y) position and an angular orientation.

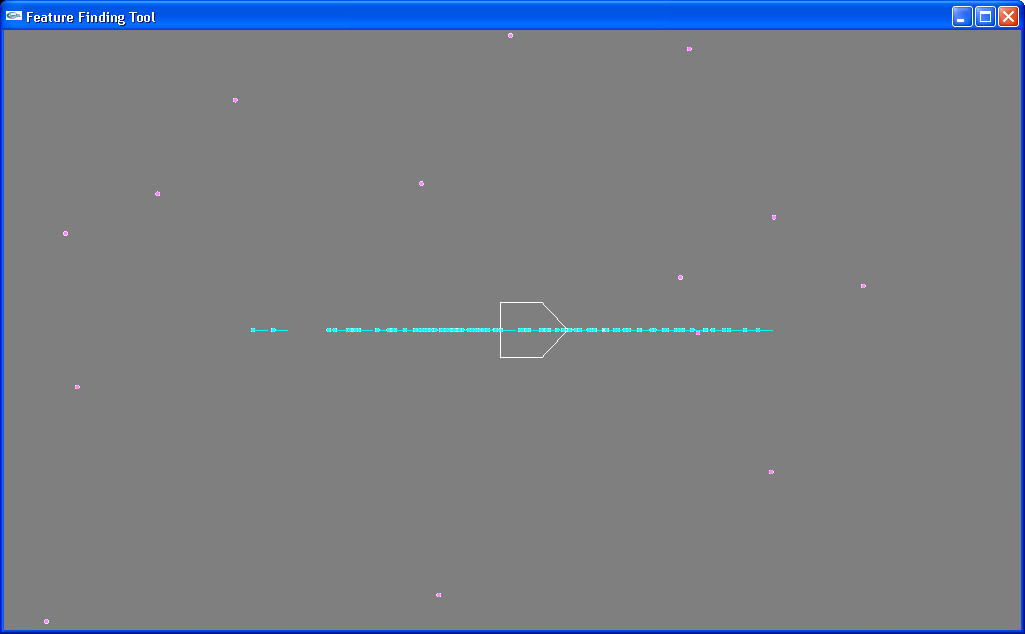

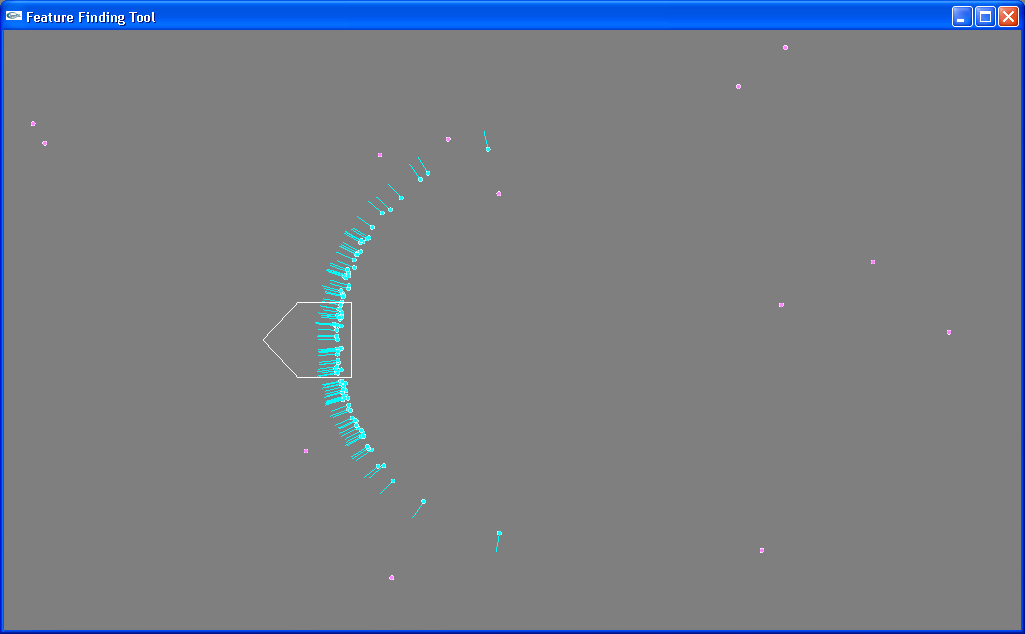

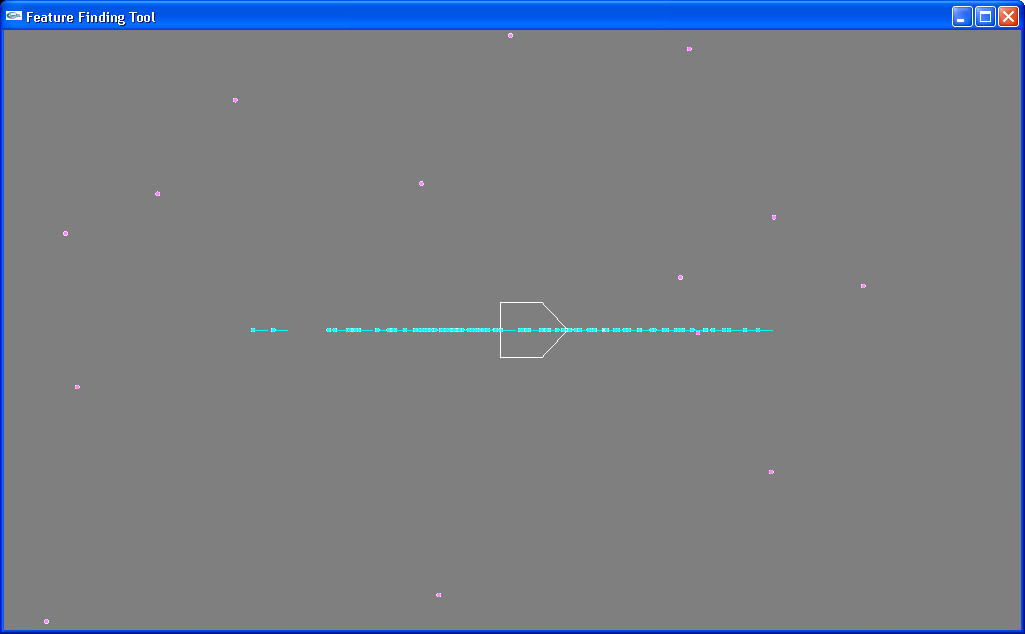

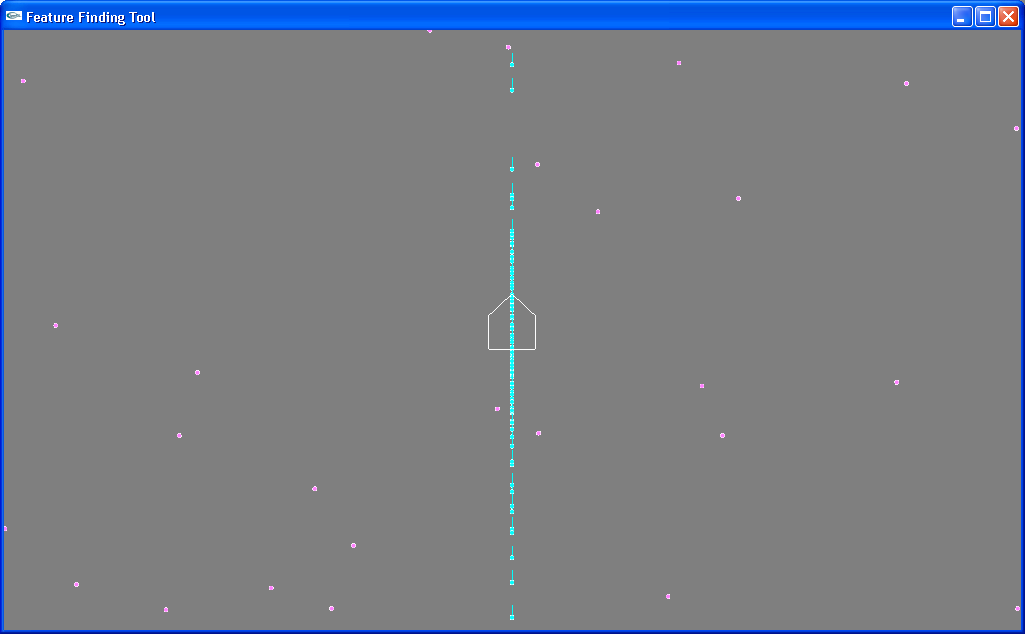

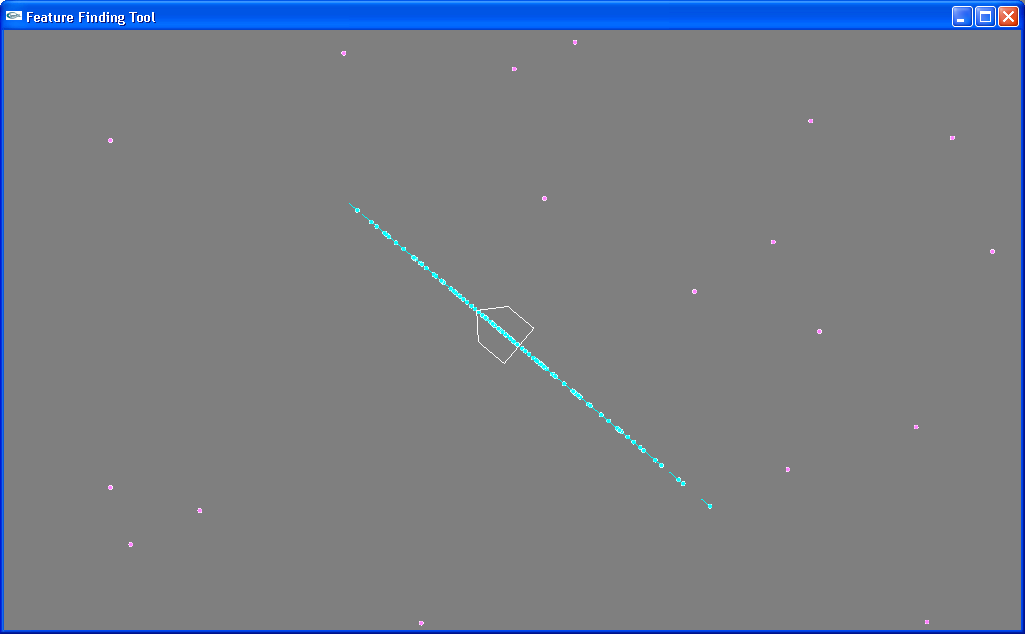

Uncertainty gathers in the three pose dimensions. Displayed here are pictures of horizontal uncertainty and vertical uncertainty after the

robot has moved back and forth along a line many times.

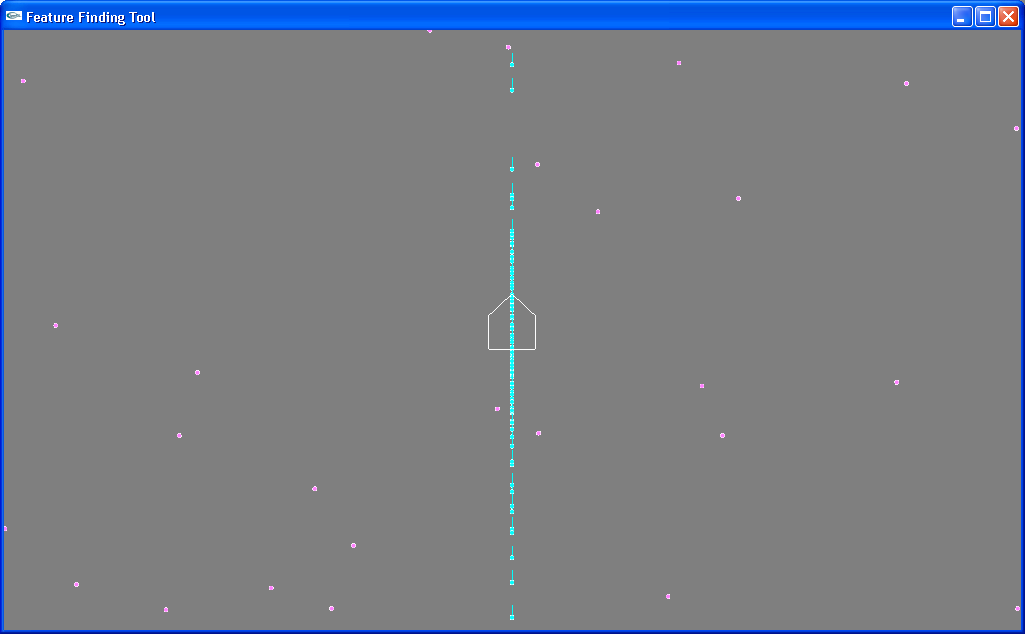

Uncertainty gathers in the three pose dimensions. Displayed here are pictures of horizontal uncertainty and vertical uncertainty after the

robot has moved back and forth along a line many times.

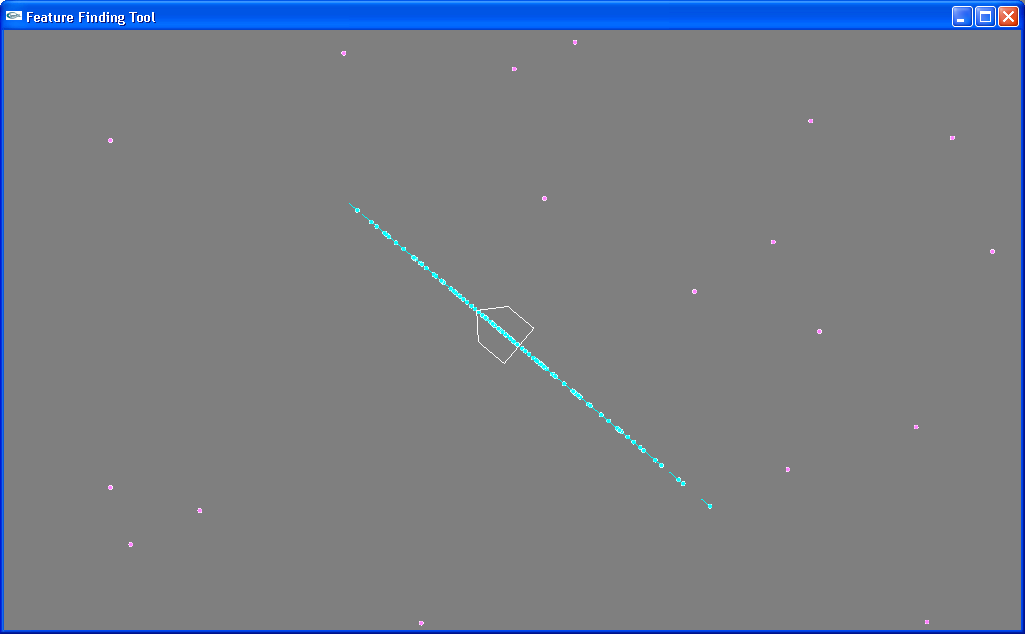

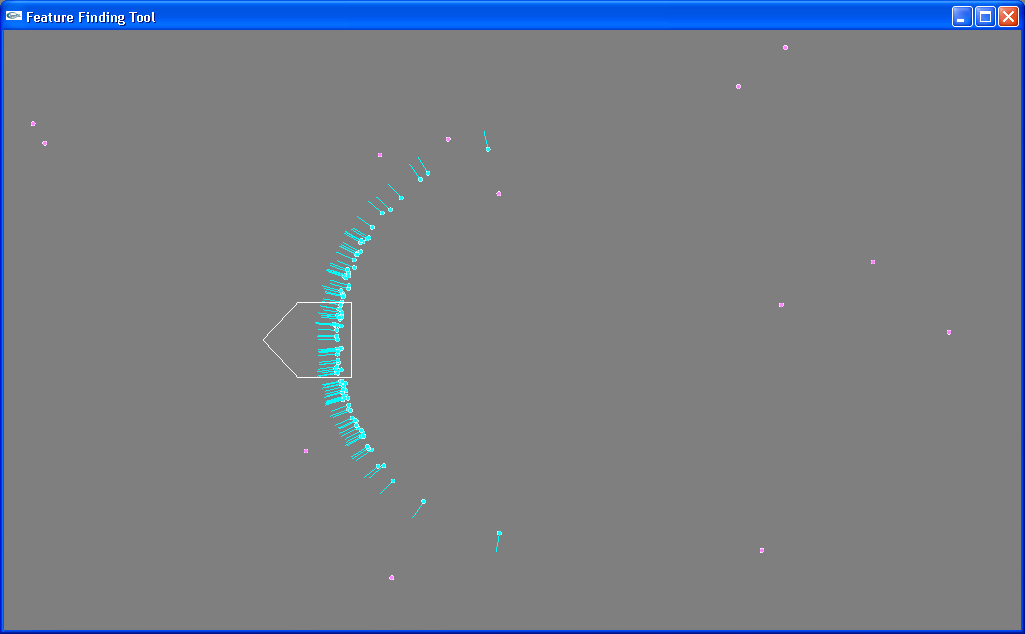

However, the uncertainty is distributed carefully so that, despite randomness, it gathers in the proper ratio given a "diagonal" movement.

However, the uncertainty is distributed carefully so that, despite randomness, it gathers in the proper ratio given a "diagonal" movement.

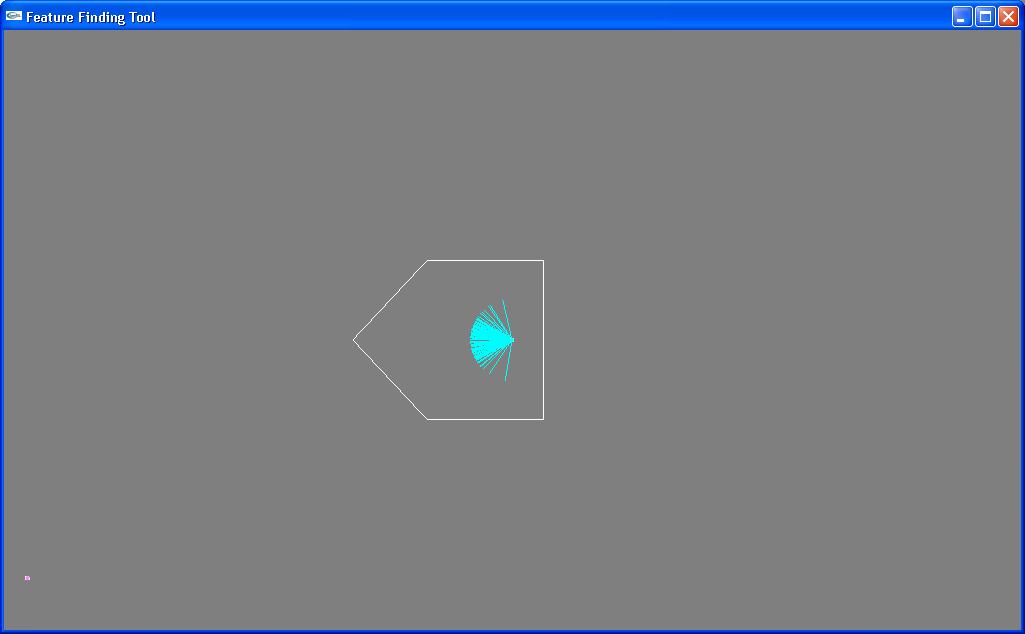

In trying to apply the robot's motion controls to these hypothesis, we found that the MapTool's method of moving the robot by translation relative to a

static (x,y) axis ("teleporting" it in a particular direction) didn't describe the robot's real motion very well. For example, if a robot hypothesis suggests

that it is facing west, but the robot's odometry currently thinks it is facing north, a robot's movement forward will teleport the hypothesis to the north

instead of moving it further west like would be correct. This problem could be corrected by doing an annoying shift of the robot's translation into a

coordinate system based on the orientation of the hypothesis pose. However, a simpler way to accomplish this same thing is to move a bit closer

o the source of the problem. The pictures below illustrate the proper movement of hypotheses with different angular poses given a particular movement.

In trying to apply the robot's motion controls to these hypothesis, we found that the MapTool's method of moving the robot by translation relative to a

static (x,y) axis ("teleporting" it in a particular direction) didn't describe the robot's real motion very well. For example, if a robot hypothesis suggests

that it is facing west, but the robot's odometry currently thinks it is facing north, a robot's movement forward will teleport the hypothesis to the north

instead of moving it further west like would be correct. This problem could be corrected by doing an annoying shift of the robot's translation into a

coordinate system based on the orientation of the hypothesis pose. However, a simpler way to accomplish this same thing is to move a bit closer

o the source of the problem. The pictures below illustrate the proper movement of hypotheses with different angular poses given a particular movement.

We changed the robot control scheme to better reflect the robot's actual controls. Instead of moving by translation relative to external coordinate

system, the robot now simply moves forward or backward, and turns left or right. This is a big more organic (well, as organic as robot motion can be)

and helps build a better understanding of what is going on with the robot's uncertainty (as well as being a better uncertainty model). It's also a lot more

fun to control in simulation.

We changed the robot control scheme to better reflect the robot's actual controls. Instead of moving by translation relative to external coordinate

system, the robot now simply moves forward or backward, and turns left or right. This is a big more organic (well, as organic as robot motion can be)

and helps build a better understanding of what is going on with the robot's uncertainty (as well as being a better uncertainty model). It's also a lot more

fun to control in simulation.

EKF Update

We attempted to implement the second FastSLAM step, updating the Gaussians for a spotted landmark. There is now some matrix multiplication support

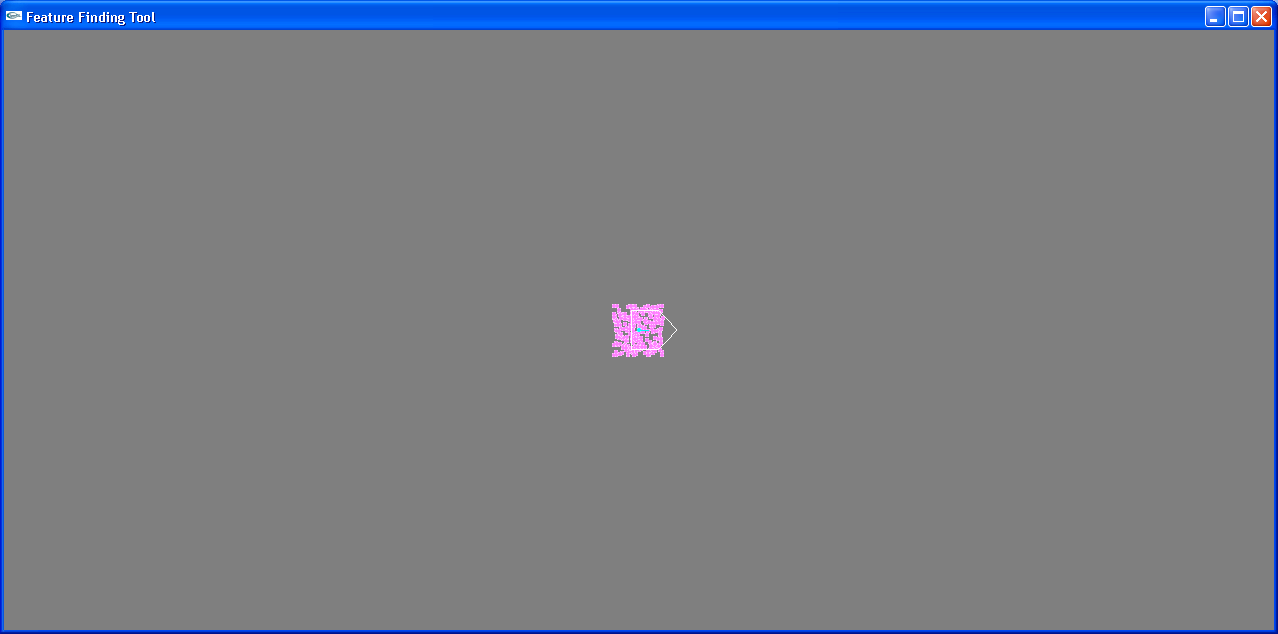

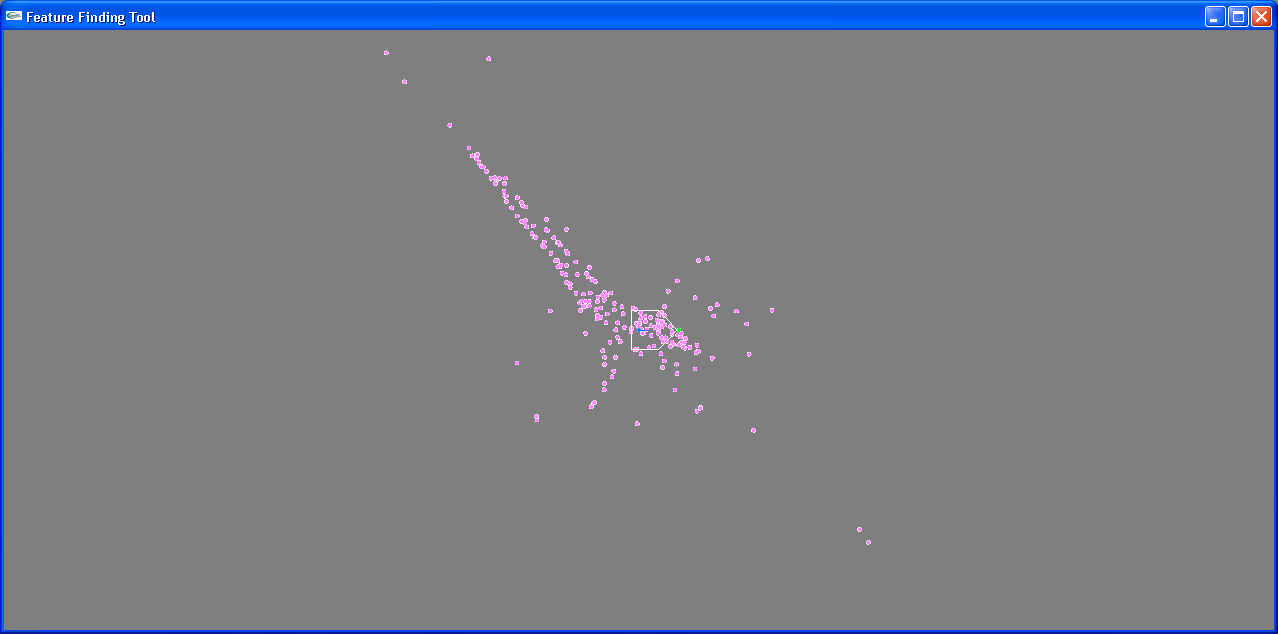

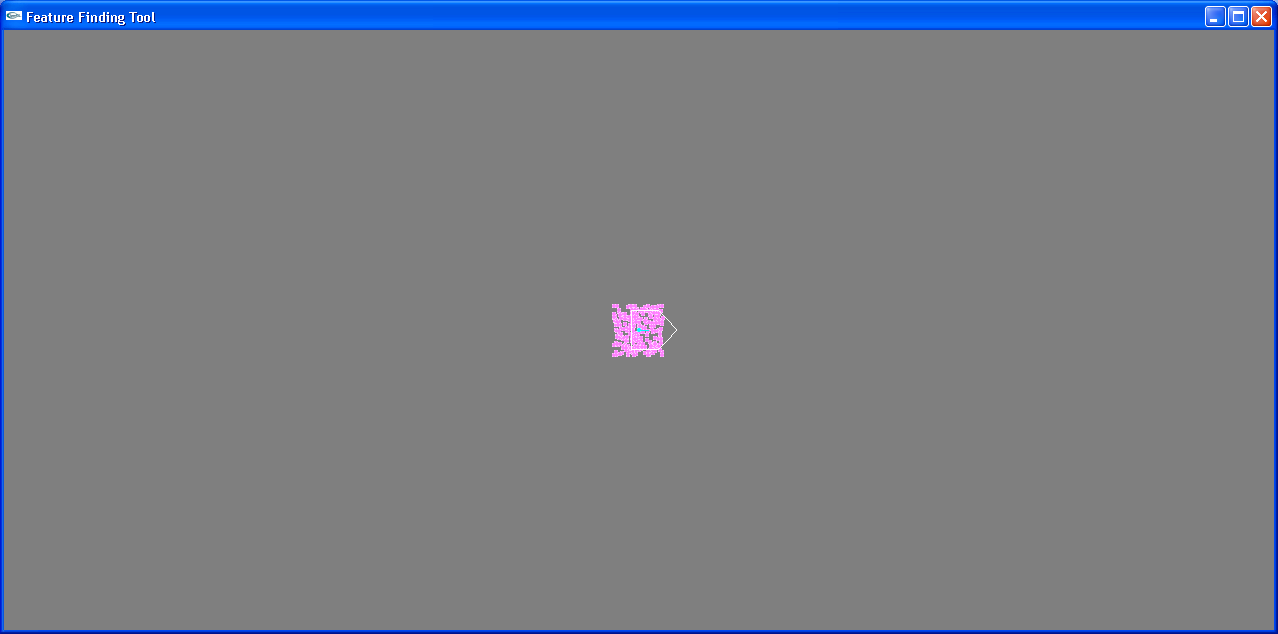

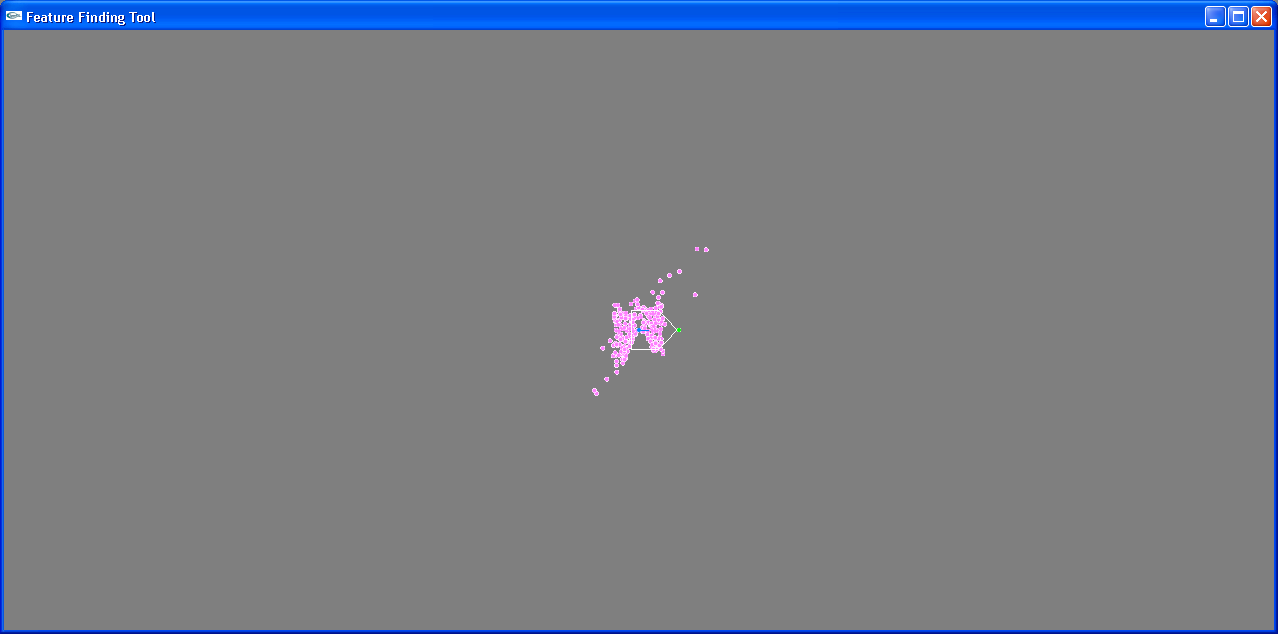

and a number of matrix calculations which attempt to do the update but are not correct. The following pictures show the current behavior. The first picture

shows the initial position of the robot and the landmarks. In order to better show the FastSLAM behavior, the particles in the simulation below each

only have one landmark (the landmark the robot will spot) and the landmark hypotheses all start out in a small area.

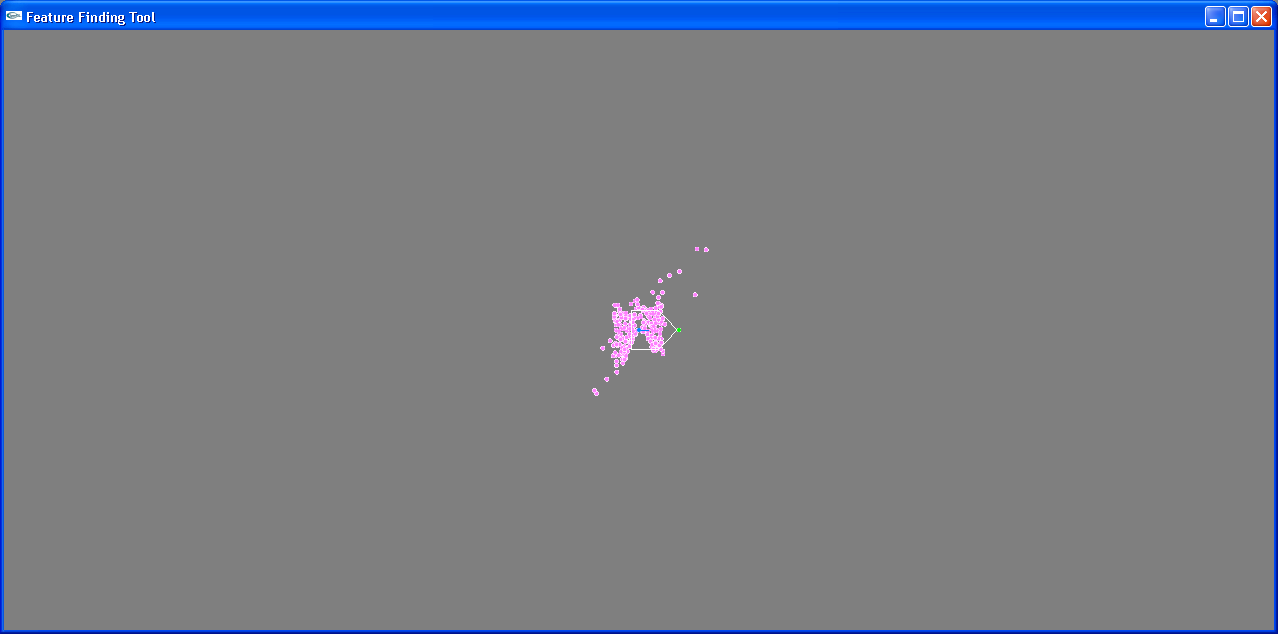

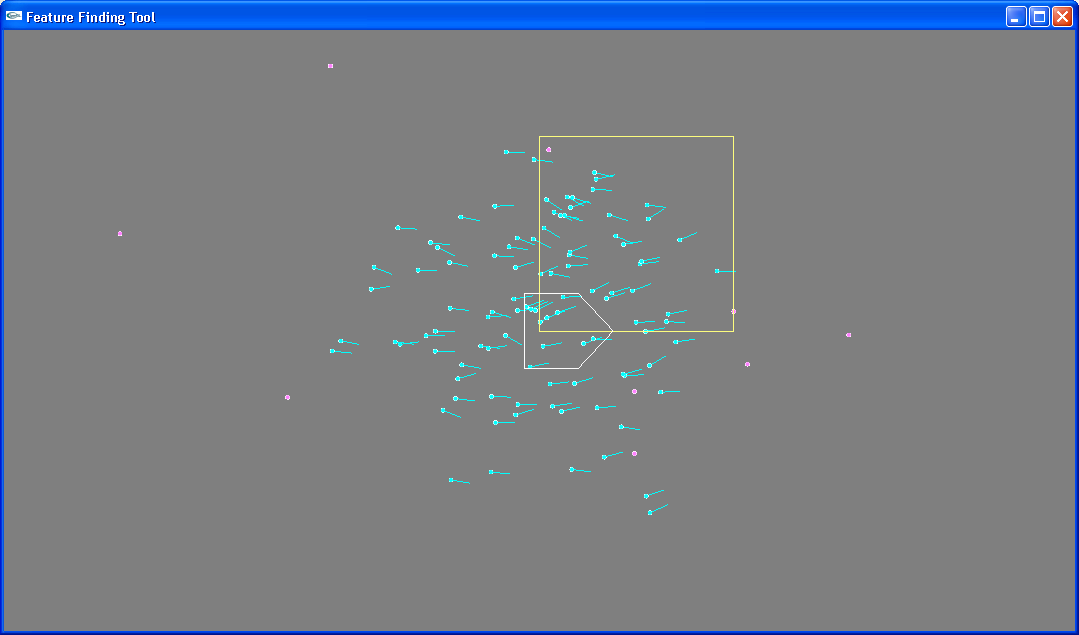

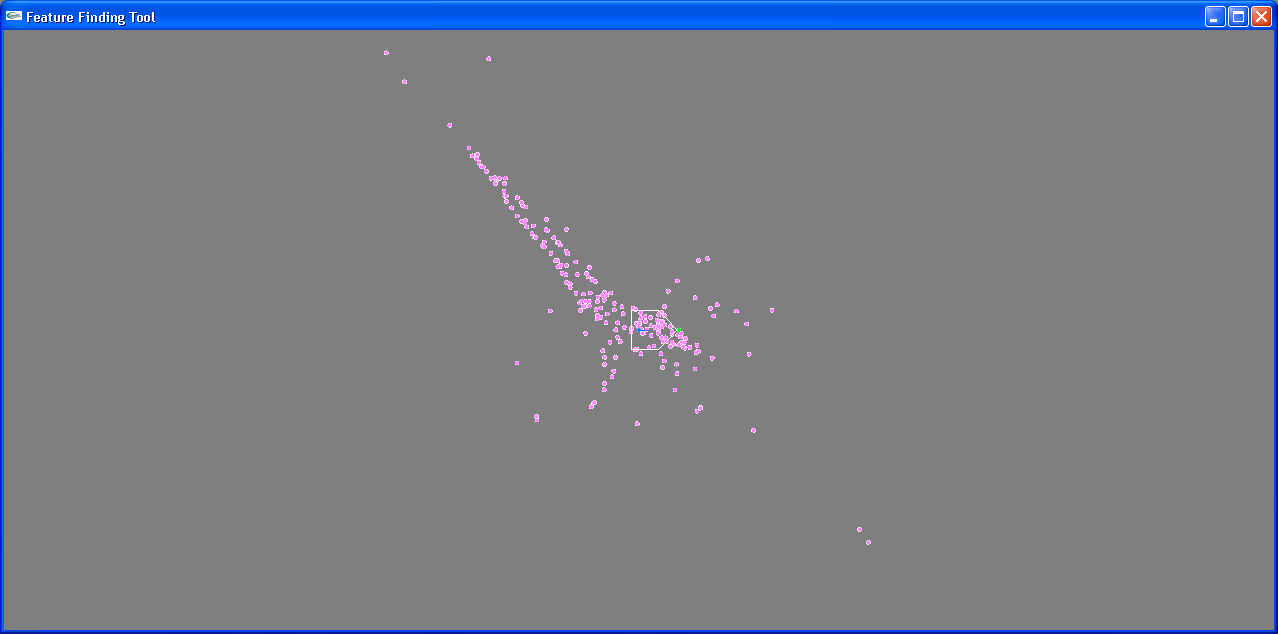

The simulation acts as if the robot spotted a landmark just in front of it, as indicated by the green dot. It repeatedly reports a sighting there, which should

have the effect of causing the landmark hypotheses to converge at that point. Instead, they crazily diverge. After one sighting, they look as follows:

The simulation acts as if the robot spotted a landmark just in front of it, as indicated by the green dot. It repeatedly reports a sighting there, which should

have the effect of causing the landmark hypotheses to converge at that point. Instead, they crazily diverge. After one sighting, they look as follows:

After five spots, they are worse:

After five spots, they are worse:

And it just goes downhill from there.

And it just goes downhill from there.

Evidence Grid

The new version of MapTool also has preliminary support for an evidence grid using the sonar data. Once the FastSLAM implementation is fully

working, the sonar history can be adjusted based on the known locations of the landmarks in order to build a better evidence grid.

With a little work, it shouldn't be too difficult to figure out the problem with FastSLAM and come up with a working implementation. The support code is

all there, it just needs to be played with a bit. The implementation here takes into account two measurement dimensions: the distance away that the

landmark is sighted at, and the angle at which it is spotted (relative to the direction the robot is facing).

The code can be found here.

Uncertainty gathers in the three pose dimensions. Displayed here are pictures of horizontal uncertainty and vertical uncertainty after the

robot has moved back and forth along a line many times.

Uncertainty gathers in the three pose dimensions. Displayed here are pictures of horizontal uncertainty and vertical uncertainty after the

robot has moved back and forth along a line many times.

However, the uncertainty is distributed carefully so that, despite randomness, it gathers in the proper ratio given a "diagonal" movement.

However, the uncertainty is distributed carefully so that, despite randomness, it gathers in the proper ratio given a "diagonal" movement.

In trying to apply the robot's motion controls to these hypothesis, we found that the MapTool's method of moving the robot by translation relative to a

static (x,y) axis ("teleporting" it in a particular direction) didn't describe the robot's real motion very well. For example, if a robot hypothesis suggests

that it is facing west, but the robot's odometry currently thinks it is facing north, a robot's movement forward will teleport the hypothesis to the north

instead of moving it further west like would be correct. This problem could be corrected by doing an annoying shift of the robot's translation into a

coordinate system based on the orientation of the hypothesis pose. However, a simpler way to accomplish this same thing is to move a bit closer

o the source of the problem. The pictures below illustrate the proper movement of hypotheses with different angular poses given a particular movement.

In trying to apply the robot's motion controls to these hypothesis, we found that the MapTool's method of moving the robot by translation relative to a

static (x,y) axis ("teleporting" it in a particular direction) didn't describe the robot's real motion very well. For example, if a robot hypothesis suggests

that it is facing west, but the robot's odometry currently thinks it is facing north, a robot's movement forward will teleport the hypothesis to the north

instead of moving it further west like would be correct. This problem could be corrected by doing an annoying shift of the robot's translation into a

coordinate system based on the orientation of the hypothesis pose. However, a simpler way to accomplish this same thing is to move a bit closer

o the source of the problem. The pictures below illustrate the proper movement of hypotheses with different angular poses given a particular movement.

We changed the robot control scheme to better reflect the robot's actual controls. Instead of moving by translation relative to external coordinate

system, the robot now simply moves forward or backward, and turns left or right. This is a big more organic (well, as organic as robot motion can be)

and helps build a better understanding of what is going on with the robot's uncertainty (as well as being a better uncertainty model). It's also a lot more

fun to control in simulation.

We changed the robot control scheme to better reflect the robot's actual controls. Instead of moving by translation relative to external coordinate

system, the robot now simply moves forward or backward, and turns left or right. This is a big more organic (well, as organic as robot motion can be)

and helps build a better understanding of what is going on with the robot's uncertainty (as well as being a better uncertainty model). It's also a lot more

fun to control in simulation.

The simulation acts as if the robot spotted a landmark just in front of it, as indicated by the green dot. It repeatedly reports a sighting there, which should

have the effect of causing the landmark hypotheses to converge at that point. Instead, they crazily diverge. After one sighting, they look as follows:

The simulation acts as if the robot spotted a landmark just in front of it, as indicated by the green dot. It repeatedly reports a sighting there, which should

have the effect of causing the landmark hypotheses to converge at that point. Instead, they crazily diverge. After one sighting, they look as follows:

After five spots, they are worse:

After five spots, they are worse:

And it just goes downhill from there.

And it just goes downhill from there.