One of the most fundamental problems in robotics is developing robots that can navigate through the world without damaging themselves or others. There have been many different approaches to accomplishing this task dating from the early days of robotics (such as the Stanford Cart experiments and "Shakey" the robot). In order to solve higher-level problems, test robots must first be taught to safely move through their world.

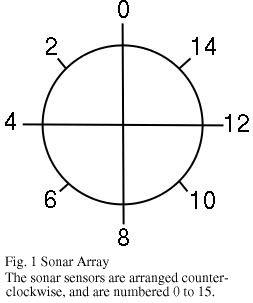

Once the robot can safely wander through the world around it, the problem becomes teaching the robot to navigate from place to place efficiently. The goal of this project is to program the Nomad 200 to travel from a given location to another given location using an abstraction of a map of the surrounding area. The Nomad 200 has arrays of sonar and infrared sensors mounted on a turret, which can be used to navigate around obstacles. The Nomad has three synchronized wheels, which are controlled by a single rotational/translational velocity parameter or an angle/distance parameter. The Nomad is limited to traveling on relatively flat ground, unlike such robots as the PolyBots[1], which are designed to mimic natural creatures and travel over diverse terrains.

In the previous lab we programmed the Nomad to wander through a map (following corridors) without running into obstacles. The current project involves developing an abstraction of the environment and implementing a decision layer to direct the robot through the environment along the shortest path to its goal. We are using a simulation program to test our code without risking damage to the robot. The code can then be downloaded onto the robot to test in real-world situations.

The first approach to robot navigation/architecture was the Sense-Plan-Act (SPA) approach[2]. In this method, the robot develops a representation of the world around it using sensors ("sense"), makes a plan of action based on this representation ("plan"), and carries out that action ("act"). The main problem with this approach is that during the time when the robot is planning, the world may change significantly (i.e. if there are moving elements in it). However, this approach was used somewhat successfully by researchers at Stanford, although it was most successful in very simple human-made "worlds."

Rodney Brooks took a new approach to robot architecture with the "subsumption" method[3]. Brooks advocated the development of insect-like robots, which reacted in direct response to sensor input (eliminating the "plan" stage). Such robots would have a default "wander" behavior that was "subsumed" by behaviors such as "run away" if the robot got too close to an obstacle. The problem with this method is that the more things that the robot is capable of, the more complicated the subsumption design gets, until eventually a limit is reached. The can-collecting robot developed in 1988[4] is considered to be the extent of what can be accomplished with this architecture.

More recently, Erann Gat described a three-layer architecture, which includes elements of both SPA and subsumption[5]. In this approach, the robot has a "Controller," which implements low-level behaviors, a "Sequencer," which determines the order in which to carry out those behaviors, and a "Deliberator," which makes high-level decisions based on current and past states.

Our method is modeled on three-layer architecture. The Controller in our case consists of low-level wall avoiding, corridor-centering behaviors, and corner-turning. The Sequencer determines which of these behaviors should be followed, based on sensor input. The Deliberator finds the path the robot should follow to its goal, and conveys the directions to the Sequencer. The Sequencer then tells the Controller which way to turn at corners, or that the robot should simply go straight, using its lower-level behaviors.

In lab A, we developed the low-level wall-avoidance and the corridor-centering behaviors. A complete description of the approach we took can be found here.

Our approach to the development of the higher-level behavior of the robot is similar to that taken by Nourbakhsh et. al.[6] in the development of the office-navigating robot DERVISH. Other approaches depend on more than basic sensor input, such as rapidly expanding random trees [7]. In the random tree approach, a path in a map is found, and the robot is then directed precisely how far to move in each direction based on that path. The approach used with DERVISH and used here is to form an abstraction of the surrounding environment and use sensor input to determine the current position and whether the robot is going in the right direction or not.

Unlike Hans Moravec's evidence grids approach[8], which uses sonar readings to get a detailed view of the surroundings, our robot only needs a vague idea of the surrounding world in order to navigate through the abstracted map.

The first step to implementing path-following is implementing a reliable corner-turning behavior. Our approach to turning corners is to begin to turn in the specified direction until the robot has turned more than 65 degrees, then move forward until the robot enters the new corridor completely, based on readings of the left and right sonars.

The Cells are assembled into a Map through the use of a descriptive file. An example map is shown in Figure 2. Red lines indicate divisions between Cells, and black lines indicate walls or barriers.

We employ a breadth first search (BFS) algorithm[9] to compute the shortest path from a start Cell to a goal Cell. The path is represented by a numeric series of Cells from start to end.

The robot begins at the specified start Cell, and begins facing East. Once it moves, it keeps track of the last Cell it was in and the direction in which it is moving. If there is a transition from detecting a corridor on either side to no longer detecting a corridor, or vice versa, the robot has passed into a new Cell. Based on the side on which the transition occurred, the direction of motion, and the previous Cell, the robot can determine its new State. For example, if the robot begins in Cell 9, and in its next Cell it detects a corridor only on the right, the robot can determine that it has passed into Cell 10. However, if it detects a corridor on both sides, it has passed into Cell 8. The current direction it is traveling helps get rid of ambiguities caused by symmetry of the Cells on either side. Should the robot find itself in a Cell that does not match the map, then it assumes that it made a mistake somewhere along the way and aborts. A more robust implementation would involve assuming that the robot is still on the path, but that the environment has changed (i.e., a door opened, or a new obstacle was placed in the robot's path). The map would then be altered to reflect this change, and a new path computed starting at the robot's current Cell.

The robot is given its start Cell, a goal Cell, the abstracted Map, and the path computed by BFS (a series of Cell numbers). It begins by establishing in which the direction the next Cell on the path is located, and then rotates to point in that direction. It begins moving along the path, checking to see if it has entered a new Cell as it travels. If the robot enters a new Cell, it first checks to determine what Cell it is in. This is accomplished by establishing whether there are walls or corridors on the robot's left and right sides. If the surroundings match the previous Cell that the robot was in, then it is assumed that the robot is still in that Cell, and at least one of the sonar readings must have given a false reading. Otherwise, the robot's surroundings are compared to the Cell that is the next adjacent Cell in the direction that the robot is currently traveling. If these do not match, then it is assumed that the robot lost its path, and the program exits. If they do match, then the new Cell is returned as the robot's current location. If this location does not match the path towards the goal, then ideally the robot would recalculate a path to goal from its current location. However, this was not implemented in the interest of time. Instead, the robot halts and the program exits, having lost the path. If the current Cell is on the path to the goal, then the number of the next Cell on the path is retrieved, and its location relative to the the current Cell is established. This is all accomplished by the Deliberator. The Sequencer then establishes whether the robot needs to turn to go to the next Cell, and if so, in what direction. It then runs the appropriate low-level behaviors in order to turn or go straight, depending on where the path goes. This continues until the robot reaches the end state, or the robot halts prematurely.

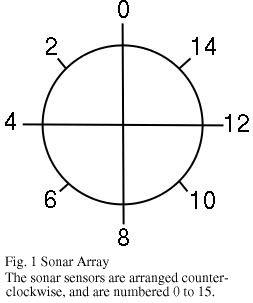

We have implemented corner-turning, map abstraction, path computation, and path-following. The corner-turning works to a certain extent, but has some bugs that appear when the robot is not instructed in which direction to turn by the decision layer of the Deliberator. Figure 2 shows the robot wandering through the map, turning at every possible corridor (favoring left). The wobble after the second turn is due to the manner in which the robot detects when to begin a turn. Since the robot ends its left turn at less than a 90 degree angle, it senses the hallway on its right, and since it tries to turn at every opportunity, it begins to turn to the right. However, it is thwarted by the wall, and then detects too much space on the left and begins to turn to the left. In addition to adding the Deliberator, this problem was minimized by redefining the end of the turn to be the point where the robot detects walls on either side, not just on the side that it turned on.

Upon integrating decision making into the program, this problem did in fact disappear. In the above case, should the decision module instruct the robot to turn to the left, then robot would turn as before, but when the turn is completed, it tells the robot to continue with its corridor-centering behavior, rather than attempting to turn at every possibility.

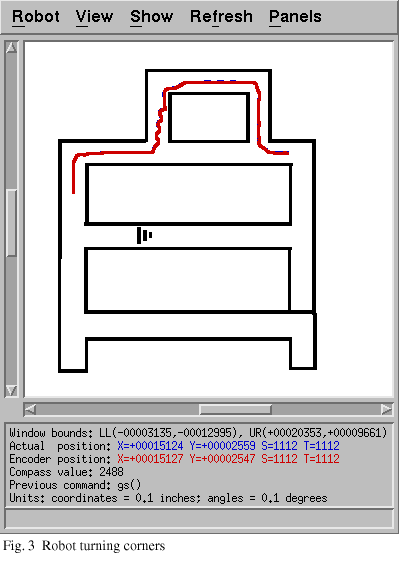

The implementation of the map abstraction and the path computation appears to work without error. Figure 4 shows a series of sample runs on the map in Figure 2.

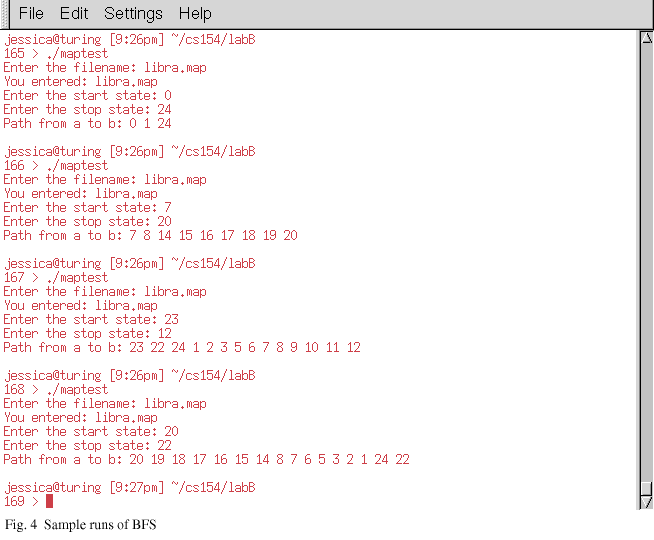

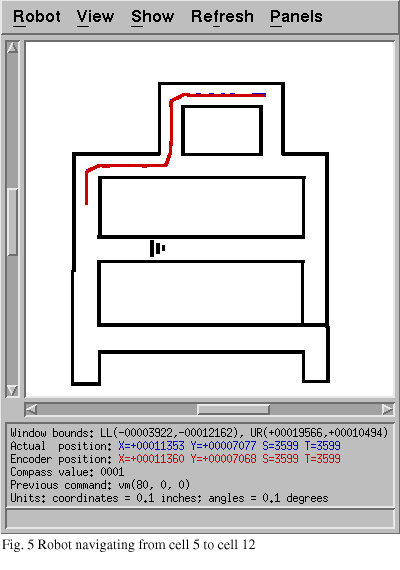

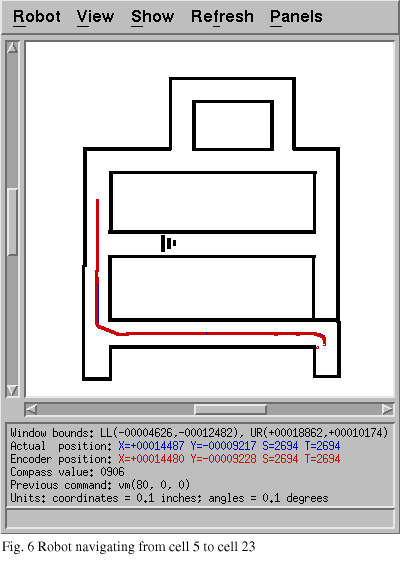

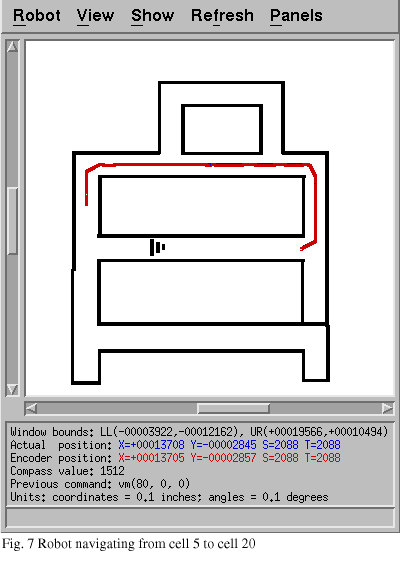

In the final stage of the project, we implemented path-following using the abstracted map and path calculated by BFS, as described in the approach section. It runs with varying degrees of success, sometimes managing to turn a corner with the corridor-centering behavior instead of recognizing that it has reached a new Cell, and should recalculate its current Cell. This appears to be dependant on the time it takes to go through its program loop. In such cases, the robot aborts its attempt to reach the goal. Figures 5, 6, and 7 show successful runs of the robot. In each case, the robot begins in Cell 5, and successfully travels to the goal. One minor drawback of our implementation is that the robot halts as soon as it detects that it is in the goal Cell. Since it calculates its current Cell as soon as it enters the Cell, this means that the robot halts before it is completely inside the goal Cell. This can be seen in all three of the runs shown. Perhaps a more reasonable approach would be to have the robot move forward once it has calculated that it is in the goal Cell, so as to ensure that it is completely in the Cell before stopping. We did not test the robot in any environments other than those consisting only of hallways, as it is not designed to be able to navigate through rooms to their exits.

The Nomad is able to perform the basic task set before it of navigating from a given location to another given location. We have not implemented all the extra cases to handle unexpected objects, but this would not be a particularly difficult addition. In the simulator, at times the timing of the loops leads to erratic behavior, but we are fairly confident that on the actual robot this would not be as much of a problem.

One of the key factors in making the robot follow the path properly was adjusting the Sequencer so that the corridor centering function is not called until the sensors directly to the rear of left and right (sonar sensors 5 and 11 in Fig. 1) detect walls, as well as the sensors to the sides and to the front of the sides (sensors 3 and 13). In this way, we are guaranteed that the robot will not begin to adjust its position in the corridor based on inaccurate data about its angular error (i.e. the front side sensor sees a wall, but the back side is still in the corridor, or vice versa). This discrepancy caused the robot to mistakenly think it had changed states before we adjusted it to assure that the robot is completely in the hall before calling the corridor-centering function. Another key factor was coming up with a feasible abstract representation of the map and determining the proper times to recalculate the current Cell.

The first thing that could be done to improve our system is the addition of cases to avoid unexpected obstacles in the hallways. Other additions might include more sophisticated detection of changes in the current Cell or modifications to allow the robot to function in a large room full of obstacles, rather than in a map of hallways.

One of the possible continuations of this line of study might be to create a messenger robot, which could use a wireless interface to take orders from people in the building (office building or college department), and in order travel to the room it was called to, take a message or an object, and go to the next specified room. This could be quite useful in an office environment. In general, navigation in a known map is very practical for uses in office environments or school environments, though it would not be particularly applicable for exploring new territories.

1. Yim, Mark et al. "Walk on the Wild Side: The

reconfigurable PolyBot robotic system." IEEE Robotics and Automation

Magazine: 2002.

2. H. P. Moravec. "The Stanford Cart and the

CMU Rover." Proceedings of the IEEE: 1982.

3. Brooks, Rodney. "Achieving Artificial

Intelligence through Building Robots." Massachusetts Institute of

Technology: 1986.

4. R.A. Brooks,J.H. Connell and P. Ning.

"Herbert: a Second Generation Mobile Robot." MIT: 1988.

5. Gat, Erann. "On Three-Layer

Architectures." Jet Propulsion Laboratory, California Institute of

Technology: 1998.

6. Nourbakhsh, Illah et al. "DERVISH: An

Office-Navigating Robot." AAAI: 1995.

7. S. M. LaValle and J. J. Kuffner.

"Rapidly-exploring random trees: Progress and prospects." In

B. R. Donald, K. M. Lynch, and D. Rus, editors, Algorithmic and

Computational Robotics: New Directions 2001:293-308.

8. Martin, Martin C. and Hans P. Moravec.

"Robot Evidence Grids." Carnegie Mellon University: 1996.

9. Cormen, Thomas et al. "Introduction to

Algorithms." 2nd ed. MIT: 2001:531-539.